Help! My Boss Doesn't Think Usability Testing is Worth It

Once you catch the vision for what usability testing can do for a company, it's painful to be forced to design and develop blindly. If you're facing the need to get buy-in from management or your team for usability testing, this post is for you.

Don't give up. Ever.

We all know that you have to pick your battles. This is one that must be picked, and must be won. Usability testing isn't just a really good suggestion that your company or team should try. It's the right way to launch better products, faster, and with less wasted effort. Analytics alone aren't actionable, but once you see the human experience behind the data, you can determine precisely where to spend your resources.

Don't let anyone convince you that this battle isn't worth fighting, and don't let anyone wear you down.

Of course, getting buy-in doesn't have to involve conflict, but it's best to be prepared just in case. After all, if you weren't encountering or expecting any resistance right now, you probably wouldn't be reading this. So we're going to prepare you for both offense and defense.

While getting management buy-in for your usability testing initiative may seem like a battle of sorts, you don't have to dress up in chainmail and wield a mace. But if you do, please send us a picture.

Offense

If you've already tried to get buy-in for usability testing and have been shot down, you might need to start with the Defense section. But if you're in a position to make the pitch, here's what we recommend.

Breaking the ice

The best way to make the case for usability testing is to let your users do it for you. It doesn't take many users to make the case, either: 3 to 5 should do it. (You might even qualify for one of our free proof-of-concept studies; contact sales@usertesting.com to find out.)

Keep the results laser-focused by creating a highlight reel of the most engaging insights. Hearing and seeing actual user experiences on your site or app is incredibly compelling.

Let's look at a real example. Imagine that your boss or team is in charge of Spotify, and doesn't yet understand the value of user testing. Here are a few clips from just 3 users, revealing problems or concerns that users are encountering—problems you might never identify without a usability test.

Want to give your presentation some extra punch? Run a test against your competition. Have each tester perform the same tasks or answer the same questions on your app or site and on one or two competitors', then show the results. You might discover that you have a competitive advantage you didn't know about, or you might learn why your competitor is winning some customers that you're not. And you'll definitely get some good ideas along the way.

One last thought: sometimes the best way to break the ice is to chip away at it. Start bringing 45- to 90-second highlight reels of usability studies to your weekly executive meetings. It shouldn't take long for the CEO to start giving you resources to fix the issues. It's much more difficult to ignore videos of users struggling than it is to ignore analytics.

Speak the right language

Use terminology that your team understands and cares about. Empathize with them, and use their own frame of reference when making your pitch.

Clearly articulate the connection between usability and the KPIs management is concerned about right now. The connections may seem crystal clear to you, but not everyone gets it.

For example, if you're trying to convince the marketing manager that usability testing is a solid investment, talk about outcomes she cares about, like "more sales," "easier to buy," "increased revenue," or "improved brand loyalty." While "easier to use" resonates with UXers, it could be completely the wrong term for some on your team.

If you want your audience to listen, use terminology and outcomes they use every day.

If there are hot-button issues on the table right now, such as poor retention or low customer satisfaction, start there and show how usability drives those specific numbers. Enterprises can also benefit from monthly qualitative KPI benchmarking, which our new Customer Experience Analytics feature makes possible.

Champion validated learning

In order to calculate the ROI for usability testing, it's tempting to look only at hard numbers like increased revenue or improved conversion rates. "We spent $1,000 to identify bug x, we fixed it, and conversion increased by 1.2% to bring in an extra $3,000 in monthly revenue." Beautiful. Simple. But you're not always going to get numbers like that, and when you don't, it doesn't mean usability testing was a waste of money.
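Turning that kind of example into an ROI figure is simple arithmetic. The sketch below is a minimal illustration, assuming the $3,000 monthly lift holds steady and ignoring the engineering cost of the fix itself; the function names are hypothetical, not part of any tool.

```python
def roi(test_cost: float, monthly_gain: float, months: int) -> float:
    """Return ROI as a fraction: (total extra revenue - test cost) / test cost.

    Assumes the revenue lift is the same every month (a simplification).
    """
    total_gain = monthly_gain * months
    return (total_gain - test_cost) / test_cost


def payback_months(test_cost: float, monthly_gain: float) -> float:
    """Months of extra revenue needed to cover the cost of the test."""
    return test_cost / monthly_gain


# Figures from the example above: a $1,000 test and $3,000 extra per month.
print(f"ROI after 1 month:   {roi(1000, 3000, 1):.0%}")
print(f"ROI after 12 months: {roi(1000, 3000, 12):.0%}")
print(f"Payback period:      {payback_months(1000, 3000):.2f} months")
```

Under those assumptions the test pays for itself in about a third of a month and returns 200% after one month (3500% after a year). How long you assume the lift lasts drives the headline number, so state that assumption when you present it.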

In fact, usability testing can give you an early read on UX work, whose impact can often take 3, 6, or 9 months to show up in lagging indicators in your analytics.

Therein lies the extra value of usability testing: you learn something every time you test, and learning itself is valuable. Sometimes that value can be calculated, but often it's less tangible. Examples:

- Surprise competitive advantage. You run a competitive test, and you find no significant usability problems on your site this time around. But you learn that your customers can check out 30% faster on your site than on your competitor's site. You now know about a competitive advantage (which you can possibly leverage in marketing), and you know about a new threat (if your competitor figures out their checkout problem, they might gain some customers back).

- The feature nobody wanted. When conducting market research or running a competitive test, you learn that customers hate x. You were thinking about implementing x; now you won't. You’ve saved time, effort, and customer frustration.

- Guidance for launch timing. Your team is having a debate about whether feature y is ready to be launched, because you know it's not finished. A usability study reveals that customers like the feature, and aren't getting stuck, even though the feature isn't complete. The knowledge from this test may or may not result in a tangible revenue boost, but it does give your team confidence to move forward, which saves time and energy that would have otherwise been spent on the debate.

There are many more examples, but the point is to raise awareness of learning as a valuable outcome of usability testing.

Defense

To defend against any resistance, arm yourself with answers to common objections and usability testing myths.

Myth: We don't need usability testing; we have analytics

As a usability testing fan, you've got this one covered. You know that analytics tells you what happened; usability testing tells you why. That's completely true, but that line might not work on someone who loves analytics—unless you have an example.

StubHub's Go Button story is a great example of what usability testing can uncover that analytics can't.

Uncovering your own example is best, but StubHub's "Go Button" story is compelling too. During usability testing, StubHub discovered that the "See Details" link on their event pages was keeping a lot of people from clicking through. (Analytics can indicate that people are abandoning the funnel here, but it doesn't explain why, and it certainly can't tell you what to do about it.) Replacing that ambiguous link with a clear "Go" button resulted in millions of dollars in increased revenue. Usability testing showed them why people weren't converting, and gave them a clear path forward.

Myth: Usability testing is expensive

This one is almost too easy these days, but we know this myth still lives on in some organizations, so here's the 3-part answer:

- The value is incredibly high. Let's get down to the root issue here. If somebody tells you they don't want to do testing because the cost is too high, what they're really saying is that they don't believe testing is valuable. After all, is your company paying rent? How about insurance? Those costs are high, but nobody uses that as an excuse to drop those expenses, because they're viewed as essential. That's where to start the conversation about the cost of usability testing: remind people that usability testing is one of the best ways to achieve amazing results, like identifying conversion killers so that you can dramatically increase revenue. If the value is that high, the cost is negligible in comparison.

- The cost is (now) low. Sure, there was a time when the logistics of usability testing made it daunting, and even expensive. With today's testing tools and recruiting options, that's no longer true. In about an hour, you could have a list of tools, prices, and recruiting options that can squelch any complaints about the cost of testing. Lay out a plan with hard numbers so that nobody can hinder your plans by throwing out ridiculously high estimates.

- The alternative is expensive. What's really expensive is using engineering resources (the biggest constraint in many companies) to build something and then relying on analytics to tell you whether it was the right thing to build, and whether it's working. The opportunity costs associated with building the wrong thing easily eclipse the cost of testing.

Confront any lingering ideas about usability testing costing thousands of dollars per study.

Myth: Usability testing is too complicated and time-consuming

This is mostly a concern of small businesses. Large enterprises typically have the resources to tackle usability testing; it just becomes a question of priority for them (which all of the points made above should address). But sometimes the in-house marketing/UX/engineering team's time would be better spent on fixing problems than finding them. That's why our enterprise plans come with access to a research team who can conduct usability studies and even watch the videos and summarize the findings.

But back to small businesses. Is usability testing too complicated and time-consuming for them? Eric Ries, pioneer of the Lean Startup movement, doesn't think so.

I scheduled UserTesting.com sessions, making sure that I got participants in all the main branches of the experiments. Within a few hours, I had a dozen 15 minute videos of people using the product. The entire process, including analysis, took about one full day. —Eric Ries

Lean startups wouldn't embrace usability testing if it were a complicated and time-consuming way to get feedback.

Steve Krug, author of the popular book Don't Make Me Think, says that UserTesting.com "Requires almost no effort and gets you results incredibly quickly."

Let's take a look at what it actually takes to run a simple study once you've determined what you want to learn. The numbers can vary widely here, but these are ballpark estimates for a straightforward 15-minute test with 5 users from the UserTesting.com panel:

- Write the tasks and questions (20 minutes to write them; 2 minutes to input them during the test creation process)

- Specify demographics (1 minute)

- Complete and order the test (2 minutes)

- Eat lunch (wait 1 hour for the video results)

- Watch the videos at 1.5x speed. Re-watch some portions, annotate, and create clips to share. (90 minutes)

Total time you spend: about 2 hours

Total elapsed time, from the start of the project until you're ready to present your findings: about 3 hours
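If someone questions those totals, the ballpark figures can be tallied in a few lines of Python. This is only a sketch: the step labels and the split between hands-on and waiting time are our own bookkeeping, and the minutes are the same estimates listed above, not measurements.

```python
# Ballpark minutes for each step, copied from the list above.
# The flag marks steps that need your attention; waiting only adds elapsed time.
steps = [
    ("Write tasks and questions", 20, True),
    ("Input them during test creation", 2, True),
    ("Specify demographics", 1, True),
    ("Complete and order the test", 2, True),
    ("Wait for the video results (lunch)", 60, False),
    ("Watch, re-watch, annotate, and clip", 90, True),
]

your_time = sum(minutes for _, minutes, active in steps if active)
elapsed = sum(minutes for _, minutes, _ in steps)

# Raw playback for 5 users x 15-minute sessions watched at 1.5x speed.
playback = 5 * 15 / 1.5

print(f"Your time: {your_time} min (~{your_time / 60:.1f} h)")
print(f"Elapsed:   {elapsed} min (~{elapsed / 60:.1f} h)")
print(f"Playback:  {playback:.0f} min of the 90-minute review")
```

The totals come to 115 minutes of your time and 175 minutes elapsed, which round to the 2 and 3 hours above. The playback line suggests the 90-minute review budget is realistic: five 15-minute videos at 1.5x take about 50 minutes, leaving roughly 40 minutes for re-watching, annotating, and clipping.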

Objection: We don't want to undo the work we've done

Ok, this one's tricky. It's such a ridiculous objection that nobody is going to say it out loud. You have to listen to the subtext in order to discover this objection within your company. When you find out that people are resisting testing because they can't come to grips with the possibility of "wasting" work that's already been done (even if usability testing reveals that it needs to be tossed), you need to appeal to Eric Ries's story once again.

Here's an excerpt in which he explains the value of learning early in the process. He even talks about throwing away thousands of lines of code.

For anybody who resists discovering problems after an initial investment of design or engineering time, you might need to remind them of the obvious: Any problems in your product or site will be discovered at some point—either before launch when you test, or after launch when sales dip or customers complain or move to your competitor. Putting your head in the sand doesn't work. It just delays the inevitable, and at a high cost.

Myth: We can keep the testing budget at rock bottom thanks to the 5-tester rule

You might encounter managers or team members clinging to the popular notion that you need to test only a small number of users to catch all the critical problems.

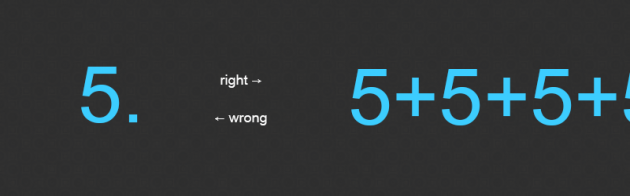

It's true that you can uncover a lot of actionable information with just a few users, but an iterative approach is best. In Jakob Nielsen's often-referenced article, Why You Only Need to Test with 5 Users, Nielsen actually recommends running multiple 5-user tests.

Jakob Nielsen suggests tests of 5 users at a time, not 5 users total.

"Why do I recommend testing with a much smaller number of users? The main reason is that it is better to distribute your budget for user testing across many small tests instead of blowing everything on a single, elaborate study. Let us say that you do have the funding to recruit 15 representative customers and have them test your design. Great. Spend this budget on three tests with 5 users each!" – Jakob Nielsen

There are three main factors to consider when determining how many testers to budget for:

- How confident do you need to be that you've uncovered enough problems? Jeff Sauro, in Why You Only Need To Test With Five Users (Explained), sheds light on the math behind the 5-user discussion. He recommends, "As a strategy, pick some percentage of problem occurrence, say 20%, and likelihood of discovery, say 85%, which would mean you'd need to plan on testing 9 users. After testing 9 users, you'd know you've seen most of the problems that affect 20% or more of the users. If you need to be surer of the findings, then increase the likelihood of discovery, for example, to 95%. Doing so would increase your required sample size to 13."

- How many functions, pages, levels, etc. do you need to test on your site or app? If you're running a small website that testers can exhaust in a single test, then running 3 studies of 5 users each might be adequate. But if you have multiple departments, landing pages, a shopping cart, account management functions, etc., you have multiple sets of studies to run.

- How often are you changing your site or app? Changes are imperative, but they have the potential to introduce new problems. If your site or app isn't standing still, neither should your testing plan be. Test, iterate. Rinse, repeat.
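The math Sauro is summarizing is the standard "at least once" probability: if a problem affects a proportion p of users, the chance of seeing it at least once with n users is 1 - (1 - p)^n. The sketch below, with function names of our own invention, searches for the smallest n that reaches a chosen likelihood of discovery. It assumes each user independently has the same chance p of hitting the problem, which is a simplification.

```python
def discovery_probability(p: float, n: int) -> float:
    """Chance that a problem affecting proportion p of users appears at least once among n users."""
    return 1 - (1 - p) ** n


def users_needed(p: float, target: float) -> int:
    """Smallest number of users n for which 1 - (1 - p)^n reaches the target."""
    if not (0 < p < 1 and 0 < target < 1):
        raise ValueError("p and target must both be strictly between 0 and 1")
    n = 1
    while discovery_probability(p, n) < target:
        n += 1
    return n


# Problems that affect 20% of users, at the two discovery targets in the quote.
for target in (0.85, 0.95):
    n = users_needed(0.20, target)
    print(f"target {target:.0%}: {n} users -> {discovery_probability(0.20, n):.1%}")
```

For p = 20%, this gives 9 users for an 85% target (about 86.6%), matching the quote. For 95%, strictly rounding up gives 14 users; 13 users reach about 94.5%, which is presumably why the quoted figure is 13. Either way, raising the target or lowering p pushes the sample size up quickly, and that is the budget conversation to have.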

Objection: We don't have time to iterate based on the feedback we receive

Has this happened to you?

Jim: We really should run some usability tests on this.

Steve: We might not make the launch deadline as it is. We don't need another to-do list.

Much of what we've already talked about can be used to get Steve on board, so we'll just focus on a few additional points specific to his objection:

- Testing during the design and prototyping stages can save a significant amount of design and development time.

- Testing might reveal that development resources would be more efficiently spent fixing bug A instead of bug B or feature Y.

- (Or, in some environments, it might make sense to concede the battle in order to make progress in the war. "Ok, Steve, then how about I get started on a usability study for features x, y, and z for the 2 weeks following launch. Then we can focus our resources on what would improve [conversions / customer adoption / customer satisfaction / revenue / etc.].")

What's Your Story?

Have you had to convince management or your team to embrace usability testing? What happened? What worked best?

P.S. For a little extra help getting buy-in, you can also download our "Gaining Executive Buy-In for User Research" whitepaper.
