We Increased Our Conversion Rate More Than Sevenfold in One Month. Here’s How!

In today’s guest post, ZoomShift co-founder Jon Hainstock shares how user feedback helped his company increase customer conversion and improve its onboarding process. Enjoy!

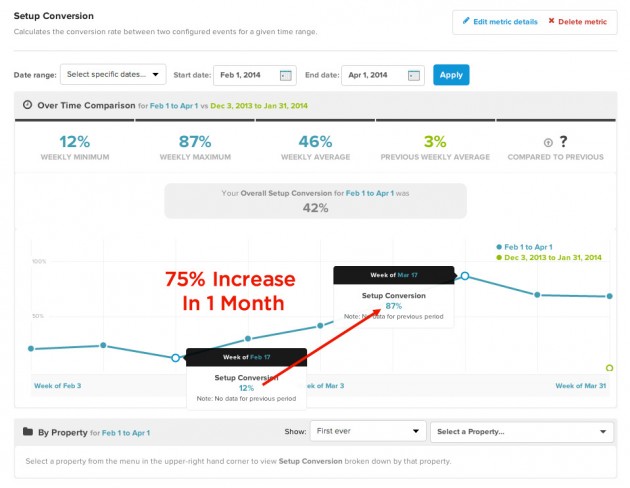

For a SaaS startup, onboarding users is one of the most important parts of the business. You only get one shot to show potential customers how they will benefit from using your product. We learned this the hard way when we released a new version of ZoomShift and saw an abysmal 12% setup conversion rate. For us, this meant that 88% of signups never fully set up their accounts, abandoning the process before they could see the power and value of our product.

We found that the only way to truly see where people were getting hung up in the setup process was to watch them try to complete the steps. We used UserTesting to get the feedback we needed to increase our setup conversions from 12% to 87%. That’s a jump of 75 percentage points, putting our new rate at more than seven times the old one (a relative increase of 625%)! In this article, I’ll show you how we did it, and how you can have similar success.
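Percentage points and percent change are easy to blur when reporting conversion gains. A move from 12% to 87% is 75 percentage points in absolute terms; relative to the starting rate it is (87 - 12) / 12 = 6.25, a 625% increase, which leaves the new rate at 7.25 times the old one. The small sketch below makes the three figures explicit; the helper names are illustrative only and do not come from any analytics tool.

```python
def percentage_point_change(before: float, after: float) -> float:
    """Absolute difference between two rates, in percentage points."""
    return after - before


def relative_change_percent(before: float, after: float) -> float:
    """Change measured against the starting rate, as a percent of that rate."""
    return (after - before) / before * 100


def multiple_of_original(before: float, after: float) -> float:
    """How many times larger the new rate is than the starting rate."""
    return after / before


# Setup conversion rates from the article, in percent.
before, after = 12.0, 87.0
print(f"{percentage_point_change(before, after):.0f} percentage points")  # 75 percentage points
print(f"{relative_change_percent(before, after):.0f}% relative increase")  # 625% relative increase
print(f"{multiple_of_original(before, after):.2f}x the original rate")    # 7.25x the original rate
```

Reporting both the percentage-point change and the multiple avoids overstating or understating the result.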

How We Set Up Our Before and After Tests

Before we started testing, we defined our goals. These were our top three goals for the initial study on UserTesting:

- Figure out exactly where people get confused in the setup process.

- Determine how long, on average, it takes someone to complete the setup guide.

- Learn which parts of our setup guide are working well.
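For the second goal, it helps to record one number per tester: how many seconds they took to finish the setup guide, or nothing if they gave up. The sketch below assumes that format (the function name and data shape are hypothetical, not a UserTesting export). It counts non-finishers separately and reports the median next to the mean, since a single tester who gets badly stuck can drag the average far from the typical experience.

```python
from statistics import mean, median
from typing import Optional


def summarize_completion_times(durations_sec: list[Optional[float]]) -> dict:
    """Summarize setup-guide completion times from test sessions.

    Each entry is one tester's time to finish the guide, in seconds,
    or None if that tester never finished (hypothetical data format).
    """
    finished = [d for d in durations_sec if d is not None]
    return {
        "testers": len(durations_sec),
        "completed": len(finished),
        # Times are converted to minutes; None when nobody finished.
        "mean_min": mean(finished) / 60 if finished else None,
        "median_min": median(finished) / 60 if finished else None,
    }


# Hypothetical timings for five testers; the fourth never finished.
print(summarize_completion_times([240, 300, 360, None, 330]))
# {'testers': 5, 'completed': 4, 'mean_min': 5.125, 'median_min': 5.25}
```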

I highly recommend establishing your goals before creating your study: they help you design a better test and focus on looking for the right feedback.

Our Study Setup

After we set our goals for the study, we worked on our requirements. We looked for people who fit our customer profile. For ZoomShift, this is a person between the ages of 30 and 50 who is a business manager or owner and who makes less than $100,000 per year. We found that the more specific you make your customer profile, the better your feedback will be.
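Writing the customer profile down as explicit rules is a quick way to check that every requirement is unambiguous, for example whether a 50-year-old qualifies. The sketch below encodes the ZoomShift profile as a screening predicate. It is only an illustration: the `Candidate` fields and role labels are hypothetical, treating ages 30 and 50 as inclusive is an assumption, and this is not how UserTesting's own demographic filters are configured.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    age: int
    role: str            # hypothetical labels, e.g. "owner", "manager", "employee"
    annual_income: int   # US dollars per year


# Roles that match the profile: business owners and managers.
TARGET_ROLES = {"owner", "manager"}


def fits_customer_profile(c: Candidate) -> bool:
    """True if the candidate is aged 30 to 50 (inclusive, by assumption),
    is a business owner or manager, and earns under $100,000 per year."""
    return (
        30 <= c.age <= 50
        and c.role.lower() in TARGET_ROLES
        and c.annual_income < 100_000
    )


print(fits_customer_profile(Candidate(age=42, role="Owner", annual_income=85_000)))  # True
```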

If you don’t know your customer profile, take some time to interview your customers and create a buyer persona. Then come back to your study and set it up for your ideal customer.

Our Study Tasks

We gave testers broad tasks with very little direction, because we wanted to hear what they were thinking when they got stuck. Once they finished the tasks, we asked the following questions:

- What frustrated you most about this setup guide?

- If you had a magic wand, how would you improve the setup process?

- What part of the setup guide was the easiest to complete?

We made sure our questions aligned with the goals of the study. For example, this study focused only on the setup process, so we didn’t distract users by asking them to attempt other tasks. If you take the time to tie your questions closely to what you want to learn from the test, you’ll get the most helpful feedback.

The Results of the First Test (Before)

We received a lot of valuable feedback from our first study. A few of the sessions were brutal to watch, because some people had terrible experiences. We realized that we were making too many assumptions in our setup guide and that we needed to hand-hold people through each step if we wanted them to see the big picture. We also learned that presenting more than one thing at a time can overwhelm users, so we stripped every distraction out of the setup guide, including navigation to other pages, even where we thought it was helpful.

The Results of the Second Test (After)

We used feedback from our initial test to adjust our setup guide, and a week later we ran another test. This time, every tester completed the setup guide quickly and easily, in around five minutes.

We pushed these changes to our signup process and saw conversions go up immediately, rising from 12% to 87% in one month! Now that our setup conversion rate has leveled off, we can spend more time A/B testing copy, layout, and color, using quantitative metrics in KISSmetrics.
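Once you move to A/B testing, you need some way to tell whether a difference between two variants is real or just noise. One common choice is a two-proportion z-test; this is a generic statistical method, not necessarily what KISSmetrics does internally, and the function below is a hypothetical helper. It assumes reasonably large samples so the normal approximation holds.

```python
import math


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int) -> tuple[float, float]:
    """Two-sided z-test for a difference between two conversion rates.

    conv_* are conversions and n_* are visitors for variants A and B.
    Returns (z, p_value), using the pooled rate under the null hypothesis
    that both variants convert equally well.
    """
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    variance = pooled * (1 - pooled) * (1 / n_a + 1 / n_b)
    if variance == 0:
        raise ValueError("All or none of the visitors converted; the test is undefined.")
    z = (p_b - p_a) / math.sqrt(variance)
    # Two-sided p-value: 2 * (1 - Phi(|z|)) equals erfc(|z| / sqrt(2)).
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Hypothetical counts: 100 of 1,000 visitors convert on A, 130 of 1,000 on B.
z, p = two_proportion_z_test(100, 1000, 130, 1000)
print(f"z = {z:.2f}, p = {p:.3f}")
```

With these made-up counts, z comes out near 2.1 with a p-value under 0.05. Deciding the sample size and significance threshold before the test starts keeps you from stopping early on a lucky streak.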

We saw a 75-percentage-point increase in conversions after incorporating user feedback.

Conclusion

Running user tests on our setup guide was one of the most valuable things we’ve ever done, because we learned how to help people see the value of ZoomShift quickly. Done well, user testing can increase your conversions and teach you a great deal about your users.

If you have any questions about our tests, or have any success stories of your own, please share them in the comments below!