The Top 10 User Testing Bad Habits (and How to Fix Them)

We recently held a webinar with Dr. Susan Weinschenk, aka "The Brain Lady," on the top mistakes people make when doing user testing. In today's guest post, Dr. Weinschenk highlights these mistakes and their solutions.

User testing is a great way to get feedback from actual users or customers about your product. Whether you are new to user testing or a seasoned testing professional, you want to get the most out of your research.

It’s easy, though, to fall into bad habits that make your testing time-consuming, ineffective, or expensive. Here are 10 bad habits to watch out for when you are doing user testing:

#10 Skip the pilot

A pilot test is a user test you run before you run your “real” test. It’s a trial run for everything: your prototype, your instructions, and the rest of the test setup. It lets you make any necessary changes before the “real” test begins. When your pilots go off without a hitch, it can be tempting to think, “Oh, maybe next time I’ll skip the pilot.” Don’t skip the pilot!

#9 Draw conclusions after 2 people

It's easy to get excited when results start coming in, but you don’t want to start changing things after 1 or 2 participants. Wait to see what everyone else does or doesn’t do before you make decisions. And watch out for confirmation bias: deciding that you know what’s going on after the first two participants and then ignoring data that comes in later.

#8 Test too many people

If you've done quantitative research before, you might be used to running studies with large numbers of people. With some exceptions, user testing is qualitative. You aren’t trying to run statistical analyses, so you don’t need lots of people. Most of the time, 7 to 10 people will get you the data you need.

#7 Create too many cells

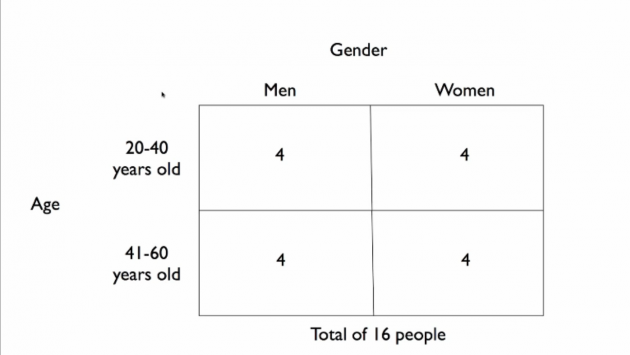

A cell is the smallest unit of categorization for a user test. Let’s say that you want to run your test with men and women, and you want to be able to draw conclusions about differences between the men's results and the women's results. That means you have 2 cells (one for men and one for women), and you need to run 4 people per cell. That’s already 8 people.

Then you decide to compare younger people with older people too, so now you have 4 cells of 4 people each, or 16 people.

Then you want to compare current customers with people who aren’t current customers, which doubles the number of cells again. You can see that this is heading toward too many people.

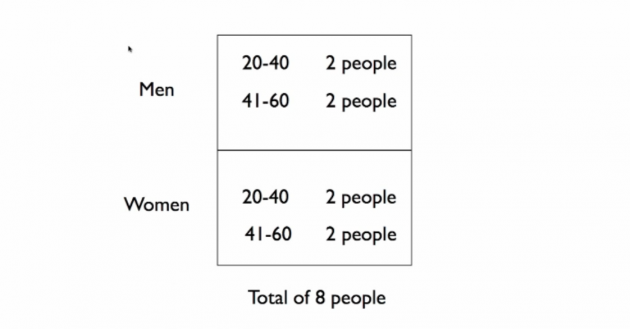

The mistake here is confusing cells with variation. If you just want variation in your sample, you don’t need all these cells.

If you want to compare men and women and simply include a variety of ages, you only need two cells; recruit people of different ages within each one.

A group needs its own cell only if you are going to draw conclusions about that variable.
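The arithmetic behind cells compounds quickly, so it may help to see it spelled out. The small Python sketch below multiplies the number of groups for each variable you plan to compare, then multiplies by the number of people per cell. The function name `participants_needed` and its default of 4 people per cell are illustrative assumptions based on the example in this section, not part of any testing tool.

```python
from math import prod


def participants_needed(levels_per_factor, per_cell=4):
    """Return total participants when every compared variable is fully crossed.

    levels_per_factor: number of groups for each variable you intend to
    draw conclusions about (e.g. 2 for men vs. women).
    per_cell: people per cell (assumed 4, as in the example above).
    """
    cells = prod(levels_per_factor)  # every combination of groups is one cell
    return cells * per_cell


print(participants_needed([2]))        # men vs. women: 2 cells, prints 8
print(participants_needed([2, 2]))     # plus younger vs. older: 4 cells, prints 16
print(participants_needed([2, 2, 2]))  # plus customers vs. non-customers: 8 cells, prints 32
```

Notice that recruiting a mix of ages inside the men's and women's cells leaves the call as `participants_needed([2])`: variation you don’t plan to compare adds no cells, so the total stays at 8.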

#6 Do a data dump

If you ran the study, you're familiar with everything: the instructions, the tasks, the results. But if you just hand the data and the video recordings to someone else, they may be overwhelmed. Summarize the results, draw conclusions, and present a cohesive story to your team and stakeholders instead of handing them a pile of data.

#5 Put too much stock in after-test surveys

People have imperfect memories, and they also tend to inflate their ratings on surveys. Be careful not to put too much stock in a survey you give participants after the task portion of the test.

#4 Test too late

Don’t wait until the product is fully designed to test it. You can test wireframes, prototypes, and even sketches.

#3 Skip over surprises

Some of the best data from user testing comes not from the tasks themselves, but from the places people wander off to or the offhand comments they make about something you weren’t even officially testing. Don’t ignore these surprises. They can be extremely valuable.

#2 Draw conclusions not supported by the data

You have to interpret the data, but watch out for drawing conclusions (often ones that favor your pet theories) that the data doesn’t actually support.

#1 Skip the highlights video

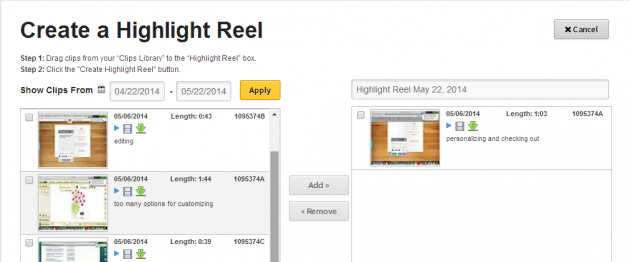

A picture is worth a thousand words, and a video is worth even more. These days, highlight reels made up of video clips are easy to assemble using tools like UserTesting.

In the UserTesting platform, you can drag and drop video clips into a highlight reel.

When you share results with stakeholders, a highlights video is much more persuasive than your own account of what happened. Make a habit of creating video clips the first time you watch the recordings. Then you don’t have to go through them again to create a highlights video.

To learn more about these 10 bad habits, check out the on-demand webinar!