In-person vs. remote usability testing: a UX design student's perspective

This is a guest post from Bethany Colden, a recent graduate of General Assembly's UX Design Immersive program. Enjoy!

As a student in General Assembly's User Experience Design Immersive, I've spent my summer eating, sleeping and breathing UX. The course has taught me how to lead the entire UX process, from research through design, testing, iteration, and final deliverables. This is the story of my experience using UserTesting to validate the design of an Android app for my final class project.

Like any good user-centered designer, I'm completely hooked on usability testing. The moment after a usability test—even a test in which I discover that my prototype needs major changes—never fails to give me a buzz of excitement. It's the whole reason I practice user experience design—to understand and design for users' actual experiences.

For the first few projects in my course, I conducted in-house usability tests. It was fun to connect with real people. I enjoyed reading participants' body language and other subtle reactions. But in every class project, it has been a huge challenge to recruit relevant users.

UX design projects are not famous for ample timeframes

Our class projects have been one to three weeks long, and each round of usability testing has been completed in a day or two. The testing process looks like this:

- Scrambling to find participants who meet your target criteria

- Hoping they're available to meet on campus during a narrow window of time

- And—of course—hoping they'll show up.

I've had multiple users cancel or simply not show. My housemates are tired of standing in as last-minute test participants! And my work has suffered because I couldn't always interview actual target users.

Take my current project for example

Two classmates and I are working on a pro-bono project for a small nonprofit that gives free Android phones to low-income individuals in Seattle. The program pays for the first few months of the smartphone plan, and the phones can be used to apply for jobs and connect with community resources.

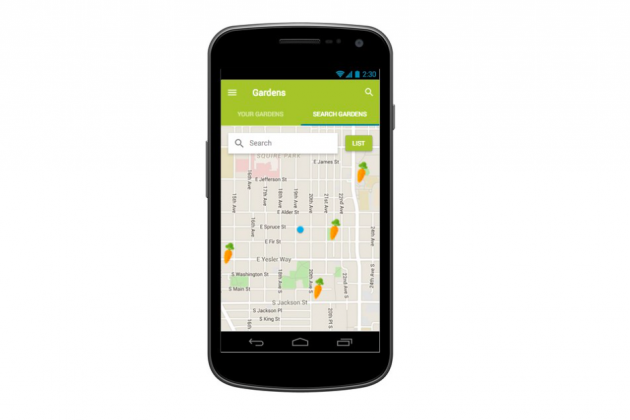

We're designing an app that will come pre-loaded on the phones. The app helps users connect with their neighbors and access affordable, healthy food by getting them involved in community gardens.

Figure: The garden locator screen in the Community Garden app

The struggle to recruit users for in-house testing

For the first round of usability testing, my design team and I spent an entire day calling local food banks and shelters to recruit participants, with minimal success. Our client also tried to recruit participants, but after multiple cancellations and reschedules, he threw his hands in the air and told us, “I had no idea how hard it is to recruit people.”

In the end, we wound up interviewing our client's friends, only one of whom passed our screener (18 to 45 years old, earning under $35,000 per year). With many design decisions, we had to question whether we were serving our target users. Our data couldn't tell us, because we hadn't actually tested the app with those users.

Remote testing to the rescue

For the second round of usability testing, we tried UserTesting. It was the first time any of us had conducted remote testing. We had built an interactive prototype in Axure, and it was well-developed enough that we didn't need to do much hand-holding, so an unmoderated remote test was just right. We wanted to test how clear and useful the functions were, and how easily users could navigate the app.

Identifying usability issues

The remote participants helped identify confusing parts of the flow. At several key screens, we asked what they expected or wanted to see, and they suggested features that we wound up incorporating into the next version—like a link to Google Maps that gives users directions to upcoming activities.

Even though we couldn't read subtle cues like body language, the remote test results provided rich data. Best of all, we knew the data reflected the needs and experiences of our target users, because UserTesting recruited participants who passed our screening requirements.

Validating our concept

We had been unsure whether our target users, who often work multiple jobs, have young kids, and/or are in school, would be interested in taking time out of their already-busy lives to volunteer at a garden. Many of our UserTesting participants were indeed interested in signing up for activities in exchange for fresh produce, and they were enthusiastic about the app. That gave us important qualitative data that we simply couldn't get by interviewing the higher-income users we had managed to recruit locally.

Recruiting six local participants and conducting six in-house usability tests would have taken my team days. Instead, we went to lunch and came back to data from six relevant users. Talk about a post-usability-test buzz!

Tips for remote user testing

For fast-paced projects, unmoderated remote testing is a fantastic way to validate usability with minimal time investment. I'm still a proponent of in-house testing for complex prototypes, and for picking up those subtle cues we can catch only in person. But remote participants still provide very useful insights.

The only problem we encountered was our own over-eagerness. We ordered our first test with six participants, but forgot to run it with a pilot user first. By the time we watched the first video and discovered that one task was confusingly written and the prototype had three broken links, the other five sessions had already been completed. Don't forget: always run a pilot test!

When we tested the next iteration, we clicked “Create Similar Test” and were able to edit small details from our first test. It was really simple, and once again, we had great data before we could finish lunch. As a student team designing for a small nonprofit, we don't have the resources to pull off robust in-house usability testing. For teams like us—or any team on a short time budget—remote testing is a very useful tool.