Fulfilling Brand Promises Through Human Insight and Design

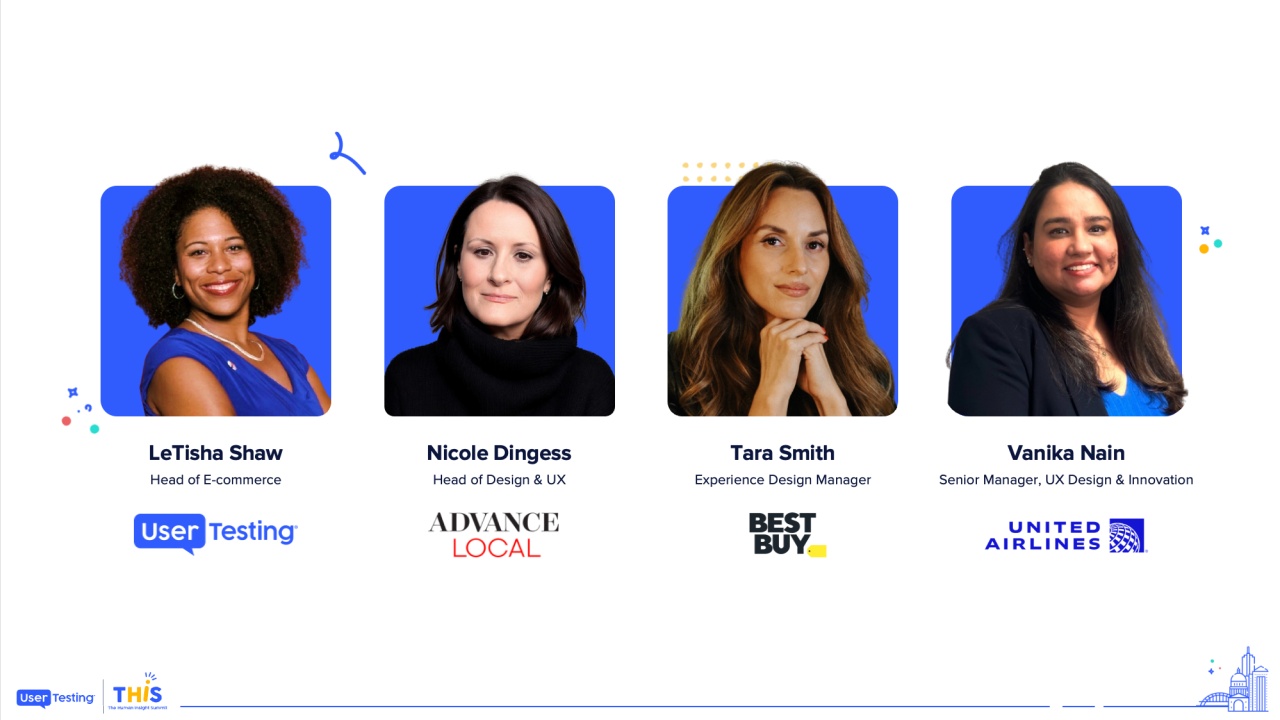

Remya Ramesh

Design Leader, Global Tech

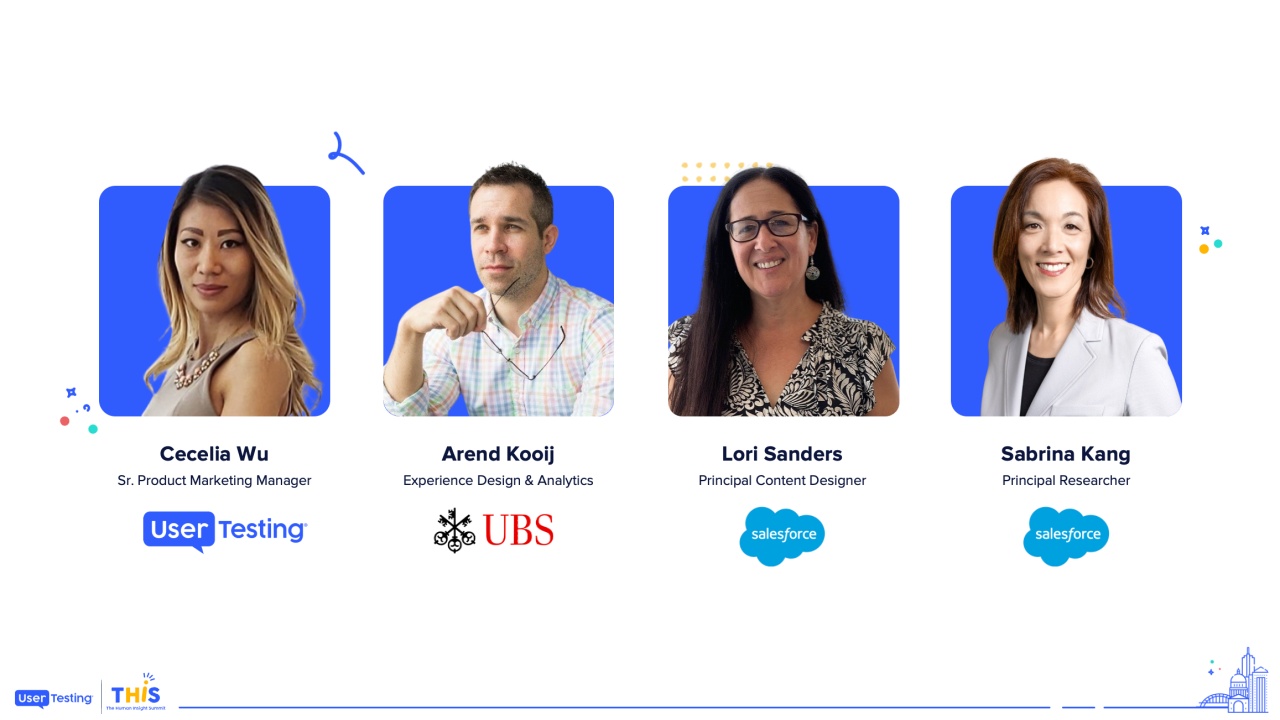

Stratis Valachis

Sr. Product Design Manager, Gousto

Peter Yeomens

Lead User Researcher, Wise

Baran Erkel

Chief Strategy Officer, UserTesting

As organisations grow, maintaining a strong connection with the customer—and consistently delivering on brand promises—becomes increasingly complex. In this panel discussion, cross-functional leaders will share how they are enabling more informed decision-making, driving innovation, and ensuring every product, service, and experience remains true to the brand. Join this conversation to learn how top enterprises are operationalizing customer centricity through insight-led design and collaboration.

Alright. Hello again, everyone. I hope full stomachs don't mean exhaustion, because we've got a really exciting next set of speakers coming up. If anyone had the buffalo cauliflower, it was exceptional. I didn't expect it to be, but it was very, very good. So thank you to everyone who crafted that experience.

We are going to take some time now to move into a panel discussion about a challenge many of us are facing today: how do we consistently deliver on our brand promises, especially when our organizations continue to grow and scale?

We will talk to a group of experts that are leading the way on how we use human insights and design thinking to stay aligned with our customers, make smarter decisions, and truly craft experiences that win hearts and market share.

To lead us in this discussion, we will bring up my colleague, Baran Erkel, the chief strategy officer here at UserTesting, and he is going to be joined by a fantastic panel with members from Gousto, Wise, and one of the world's leading technology companies. So, Baran, over to you.

Thank you very much, Nikki. Hello. Hello.

I am Baran Erkel, chief strategy officer, as Nikki said. I am really excited to lead this panel of exceptional speakers to talk about customer insights and innovation.

So we'll talk about kind of three main topics. One is how to scale insights across an organization.

Another is how to drive impact cross-functionally.

And then, lastly, using customer understanding to innovate, which is ultimately what we're here to do.

As a kind of bonus topic at the end, we'll, of course, talk about AI in the year of our Lord twenty twenty-five. We have to do that. So, I am joined by a fantastic group of panelists that I will introduce and then welcome onto the stage here. So first, Remya Ramesh is a design leader at a global technology company.

Stratis Valachis, senior product design manager at Gousto.

And Peter Yeomans, lead user researcher at Wise. Welcome to the stage, folks.

Please give them a hand.

Thank you. Thank you all for joining us. I know you're all very busy.

Busy days at several of your companies, as I learned when we were chatting. We're excited to have you here. Let me start by asking each of you to introduce yourselves a little bit more. Start with you, Remya.

Sure. Hi, everyone. I'm Remya Ramesh, currently leading design for one of the global tech companies based in London. Before that, I was based in Australia, running design organizations anywhere from small startups to scale-ups to larger corporates.

And I've been very lucky to use your product and introduce it to two of the last companies I worked with. So, yeah, very excited to use it. That was one of the times where I felt like I used a product and could actually communicate the research insights to my stakeholders very quickly, and we had the right insights to set the product direction.

So now I don't get to use that anymore. We have our own sophisticated tools, but I'm very excited to be here and learn all the cool things everyone is doing in the community.

Thank you. Stratis?

Awesome. Hi, everyone. I'm Stratis. I'm leading the product design and user research team at Gousto. We've been using UserTesting and UserZoom for many years now, and we've had great success at learning at pace and learning early, and as a result achieving great commercial results and linking the insights to that success. I talked a little bit about that last year, and I'm excited to share more about it today too.

Yeah. Thanks for having me.

Thank you, Stratis. And Peter.

Nice. So I'm Pete. I am one of five lead researchers at Wise. I've been working with UserTesting for about eight or nine years now, at OVO Energy, at Just Eat, and at Wise.

So I'm a serial implementer of the software. And, yeah, I've got a typo in my name.

I'm also ADHD, which explains why I'm probably gonna look like I'm thinking of things for the first time, because I literally am. So, yeah, strap in.

Apologies for the name typo there. Could you also just tell us what Wise does, for those who might not know?

Wise is an international money movement platform. We enable customers to hold and move money in many currencies around the globe.

Fantastic. Thank you. So before we dive into our three main topics, I'd like to start off with a lightning-quick round: maybe something that folks might not expect about your role or your organization.

Okay.

We can go to Stratis and Peter.

I've kinda hinted at it. So as well as being a researcher, I look after the global neurodiversity network at Wise.

I have the privilege of helping many of our colleagues make sense of their own neurodiversity, or, in fact, their children's neurodiversity, as they make sense of that world for themselves.

Yeah. We were just talking about that beforehand.

My son was just diagnosed with ADHD, so I was getting some advice, life advice from Peter.

Welcome to the tribe.

Thank you. Thank you very much.

Stratis? Something that they might not expect.

Yeah.

I think one thing is that, for scale-ups like Gousto, sometimes people might expect that research is not at the forefront of everything and that you have to make the case for it, but that's not the case with us. We're very fortunate to have buy-in from leaders all the way up. So the conversations we're having are very healthy: we're geeking out about how we can do research better and bake it into our processes more effectively, rather than debating whether we should do it or not.

Yeah. And that's not always the case for scale-ups.

So, yeah, that's a great thing that I really enjoy about working at Gousto.

Yeah. Scale-ups need to move fast. Right? So yeah.

My role: I operate in a cross-functional matrix organization.

My role is head of design, but I often find I'm like a diplomat. I need to get my agenda done by getting multiple different organizations or product areas to agree on a common solution and then scale it. And it's not easy, because each organization or product area has its own metrics or goals it's trying to build and ship toward. And here I am, coming from a central team, trying to figure out how we ship this in a scalable, effective way. I always find that it's more diplomacy than design leadership.

Fantastic. Thank you. I live in Washington, DC, so when you say diplomat, I think of the license plates that identify them and how aggressively they drive in my city. Very different story.

I'm sure that in your organization, you drive much more smoothly and...

A lot of bumps along the way.

...manage cross-functionally.

Awesome. Thank you. Okay. So the first topic will be about managing insights and research, and really how we organize our people, teams, and organizations to do that effectively. There are different approaches, as we know: centralized, federated or distributed, hybrid, matrix, and everything in between.

So there's no one right answer. I just wanna hear some experiences. So maybe, Stratis, starting with you: what have you seen work well when it comes to scaling insights effectively?

Yeah. That's a great question. And as you said, it varies per organization. There's not a one-size-fits-all solution. But I think there are two key things that we need to keep in mind. The first one is to think at a system level.

You need to embed insight into what success looks like at different parts of the process, whether it's the ongoing quarterly OKR work or more transformative initiatives.

If you don't define success as requiring insights, robust insights, then it's gonna be really hard to see the impact you want, because you're gonna be relying on individuals going above and beyond the incentive structures. So work with leadership and ensure that, at the brief level, for a new initiative to be signed off, there is robust insight underpinning the hypothesis you have. If not, then it should not proceed. Right? In the same way, when you start evaluating your hypotheses before you start building, having insights that give you a strong signal should be part of that process.

And that makes things a lot easier. Now, of course, at companies like Gousto, the conversation is around how you can do that better. In other companies that are maybe less mature and don't know the value of research, you have to prove it by doing. Start with a small problem to solve.

Put it through a user-centered design process and then show the impact on the back of it. If you can, invite people to observe users using it; if they don't have time, just play it back to them later. But it's really important that you prove it by doing things and communicating the impact. So that's the first one.

It's thinking at a system level. And then the second thing that has worked really well for us at Gousto is to tie it to commercial impact. Very often, I see teams that follow a very rigorous user-centered design process, and a lot of the success that the company sees is because of it. But that doesn't get highlighted in the organization.

People are not aware of it. And there are very easy ways to make the link between the two. When we started a design ops initiative last year at Gousto, we focused, for example, on learning faster, learning earlier, and speaking to customers more often, and we started measuring those activities. Once we started doing that, all of our squads started seeing great results, especially one of them, the retention one.

That was more than a 3x year-on-year improvement in commercial results. So, obviously, we started doing something differently in the way we work by learning more about our customers earlier and more often, and then there was commercial success in a lot of our squads. So we just started reporting on that.

Right? We reported the metrics of our discovery work alongside the commercial metrics, and people could make the connection. Obviously, that could be correlation rather than causation.

Right? And it might not necessarily be only that. But in most cases, it is because you start doing something differently, and then good things start happening.

So if you tell that story in a very simple way, by reporting back your research metrics in terms of ways of working, it can be very powerful, and it can solidify the presence of insight in your system.

I think I would say those two things.

Yeah. Thinking at a system level, and tying it to commercial results.

Fantastic. That's a great segue to my next question, which I'm gonna hand over to Peter. So, insights to results: super important.

Can't stop at insights. You gotta drive to decisions to then get those results. Right?

Peter, I believe you've got a framework you've worked up at Wise that involves two horizons. I'd love you to talk about how that informs the planning process and this connection to results.

Sure. I think one thing to say as well is that getting researchers in the building is the easy bit.

Changing the way that an organization makes decisions so that they are including that research is the hard bit, which is where your systems and processes make sense.

And part of that is that when an organization is maturing in research, it often realizes too late that it needs research, or that it needs to know something, like a mental model or a pattern. So part of what I do at Wise with my squad is look far enough ahead to be able to gamble on creating the knowledge that the teams will need, so that I don't have to say, "Oh, can you wait six weeks while we go and do that?" Instead, it's, "Well, here's what we think is roughly right." So it's about trying to anticipate. We've got one horizon that is now, which is more evaluative, and another horizon that is more of an educated guess as to what the organization might need.

And as you said, it turns out the more you talk to customers, the luckier you get.

Very true.

Fantastic. Yeah. I like the horizon language a lot, because it turns research-speak into language everyone can understand: instead of "evaluative research", it becomes a time frame we're talking about, versus this other time frame.

Now switching to the role of cross-functional collaboration in scaling insights. Remya, you talked about being in a matrix organization and being the diplomat.

Whether it's partnering with product, marketing, design, or senior leadership, tell us how you ensure that insights are spread, heard, acted upon, and ultimately driving results.

I think it all starts with, how we position research.

One of the things I've seen over and over is research being positioned as a service function: we are here to serve x number of teams. Moving away from that model to a more strategic level is how I try to position it, showing how leadership teams can use us strategically so that we can deliver value and business outcomes. So that's number one, to start from there. And very similar to what you're talking about, I use something like a portfolio approach. Seventy percent of our research is more tactical, short-term bets that we are working on.

So we have insights coming through that we can activate and act upon. But then I dedicate probably twenty percent of it to long-term bets: things we know are gonna come up in the first or second half of the next cycle. And then we have ten percent that we call foundational research.

That looks at what underpins everything we do, end to end. So that gives us a diversified portfolio approach. But again, it only works in larger corporates and organizations; if you're in a smaller startup or a scale-up, it's a very different story. You have one or two researchers, completely overwhelmed with a lot of stuff, so this model may not work.

Whether it works depends on the size of the organization and its maturity. And the thing that made it really stick for me and gained cross-functional alignment was almost like an insights-to-action tracker. Like, okay, we learned this.

What did we do about that? Did we change the product direction? Did we introduce a strategic pivot in the way we are thinking about where our org or product is going? So really having a list or tracker somewhere that we can pull out of our back pocket.

And when you're talking to execs: hey, did you know that we saw this drop-off? And by fixing it, we were able to generate X million in revenue. Really trying to connect the insights and the action with the company's bottom line. I think that really changes the game for research in most organizations.

So these are a few tips and tricks I've found along the way, with lots of bruises over the last twenty years to get to that point. But, again, it depends on the maturity and the culture of the organization we work in.

I love that insights-to-action tracker. Stratis, I thought I saw you writing that down. I don't know if that's what you're writing now, but it reminded me of what you were talking about: really highlighting a connection to results.

Thank you. Peter, I'm gonna throw it back to you. I think you have this concept of exposure hours at Wise to help with the cross-functional work that we do.

Yeah. Well, it's not my idea.

I ripped it off from Jared Spool.

So, basically, the premise is that the more you talk to customers, the luckier you get, as I said. And part of changing the culture in an organization is enabling people to get used to the idea of listening to customers and working out what good looks like. So at virtually every organization I've worked at, we've built a system of doing regular research where we talk to customers and then go out. Every month we talk to six businesses from a different country.

And then I go out and find different product partners who need to know things, ask them what they'd like to know, and then we do it for them. So there's no barrier, no "can you wait?"

"Oh, we'll have to do a recruitment." It's like: I am talking to these people; what would you like to talk to them about? And then they see somebody who's vaguely competent at talking to people, and they can get answers to the questions that they have.

And what's happened in the org is that design and product are now thinking in that monthly cycle and preparing things ready for me to show them or for us to ask.

And where we're taking that now: I've realized that all of us who are researchers in the room have had one of those projects where you've had a multidisciplinary team around you and you discovered something together. Light bulbs have gone on and people have made connections, and those are life-changing, nature-changing discoveries.

You can't scale that. You get one or two in a career that are like that. But what you can do for the people in the wider organization is create that sense of discovery.

In my former life, before I started pretending I was a researcher, I was a primary school teacher.

And I'm quite interested in what learning is and how things stay learned.

And what happens is that you have to connect dots. So think of it like a breadcrumb trail that leads to the light bulb moment. Even if you can't get lots of people with big job titles and expensive time around you for all of it, on the off chance they might see something, you can curate those breadcrumbs so that the light bulb goes off for them, and then it stays remembered. And then you've got insights that scale, because they become part of organizational folklore.

Wonderful. I imagine being a primary school teacher has prepared you well to manage difficult stakeholders.

I don't know about that.

Expectations.

I was very lucky to escape casework at the civil service and move into UX research. The head of UX who asked me to move said, "You've been poking around in people's heads since you were twenty-three.

You know how to do this thing. You just don't know what it's called."

Have a go. Go. Yeah. Thank you.

Stratis, I wanna come to you as we close out the first section here on managing insights and research and how we organize around it. Maybe double-click a little bit into the how.

I think you talked in our prep time about using mixed methods and the power of mixed methods.

It's okay. Yeah.

The power of mixed methods, and how it helps with organizational alignment.

Yes, of course. One aspect of working cross-functionally is ensuring that your insights are being used, but, of course, user research has limitations.

Right? We can go very deep into the why behind behavior, but if we wanna quantify how widespread that is, it's more difficult without launching larger-scale studies that are logistically challenging. So what's very powerful is to work with other functions and triangulate between different types of insights to get a richer understanding of the customer. A simple example of how we do that at Gousto: when we define opportunities, we start by working with the analytics team to see different behavioral clusters.

For example, on the menu, we saw that there's a certain group of customers who spend significantly more time on the menu than others.

And also that has an effect on their conversion.

That's what we knew from analytics. Obviously, this only tells us what they do, and we don't know the why. So then we use the customer IDs from that cluster and schedule lots of interviews with them to get to the root cause and understand the need that drives that behavior. What we found out was that this group is people who have FOMO.

They were telling us, "I just wanna see everything. I'm afraid that I'm gonna miss out on the recipes that might be good for me." And because we had increased our recipes to five hundred a month, they were getting overwhelmed, because they couldn't go through all of them. So that was a great example of triangulating the quantitative behavioral data and the qualitative why to identify the problem: how might we help those customers feel that they're not missing out on the best recipes for them, without having to do the arduous task of reviewing everything? It's a very simple process of looking at the data and then going into the why, but it's a lot more powerful than just relying on what we can offer. I think mixed methods and triangulating between different types of insight lead to a much richer understanding most of the time.

Absolutely.

You say it's a simple process that you follow, but I don't think it's often easy to do that within organizations naturally, right, because of our structures and silos. I talk to a lot of enterprise leaders who know insights are being generated across different parts of their organization but are still chasing that elusive combined view. Yeah.

But on that, it's very important to keep it simple, because the more complex you make it, the less likely people are to adopt it. So another principle we have at Gousto is to try to remove cognitive load from ourselves as much as possible.

Mhmm.

And that leads to more action than if we don't do that. So, yeah, that's another little tip from what works for us.

I love it. Thank you.

Last question, Remya. This will be for you before we go to the next section here.

So during the pandemic, you were at Coles, and I think you've had experiences with both federated and centralized models. When you were at Coles, your team went from, I think, ten to a hundred and twenty in that period. Very rapid growth. How did you ensure the customer voice was still central to the decisions being made during that change?

For those who do not know Coles, it's one of Australia's top supermarket chains. And I was very privileged to be in the head of digital role during the pandemic.

Fun times. Suddenly you're in long lockdowns in the country, and supermarkets become the new essential service.

People were locked up at home, and they wanted to order online. And we as a brand weren't ready. We really preferred "come in store". Our store experience is amazing, because we are a traditional hundred-and-fifty-year-old institution with so many brick-and-mortar stores across the country.

But digitally, we were lagging a long way behind at that time. So the good news is people were spending more, and we had investment. I was able to scale the team from ten to a hundred and twenty designers.

When I said designers, it's product design, service design, digital accessibility, UX research, content design. So it's a large team.

But the first thing, when you scale an organization of that size, is that it's very easy to lose the voice of the customer. So how do we make sure that as you're scaling, the insights and the systems are scaling with it?

The challenge for me was that I didn't have the people to start with. I inherited a team of ten when I came into that position. So a lot of the work we were augmenting through design agencies: I plugged them in as my research capability or my product design capability while I was building out the team, and then figured out how to weave them across the company to introduce new services like click and collect, which never existed until that point. And we were doing research while the whole country was in lockdown and you couldn't go beyond a five-kilometer radius of your home.

So, logistical nightmares, as you can imagine. This is where tools like UserTesting came to help. You didn't have a lot of physical opportunities to do usability testing; previously, we were all used to bringing customers into our little UX booth and testing stuff. This was also when I had the privilege of using your product and using it to scale the insights and bring them to life.

I think another key part is how I constantly share user insights with senior leadership. Not only in research forums, but in senior exec sessions: every time we met on a weekly basis, we shared, here's what we learned from the insights this week. So constantly bringing insights into the leadership decision-making forums was really key. We also introduced exposure hours, like you were talking about, and depending on your role and the level you were at, you had certain targets to meet.

At Coles, we literally had to work physically in a store to understand how the store team members operate and what we could learn from them to simplify their back-end processes, so we could actually have ninety-minute click and collect. Previously, you had to make an order and wait for half a day before you could collect it, and we were trying to figure out how to reduce that. For that, the immersion and exposure were super key. We worked in stores and stacked the products to make sure that we were learning through that experience and bringing it back to life.

So I would say it's a range of methodology: a hybrid, mainly federated and centralized. In the federated way, you're embedding your research in different teams so they can operate fast and ship things quickly. But you also need rigor and consistency from a central point of view, which is where the research ops function sits, making sure that you have the tools and the process in place so that you can scale the insights.

Great. Thank you. What a time to be in that institution.

Very privileged.

Because I don't think a lot of design leaders get a headcount from ten to a hundred and twenty. I don't think I've ever seen that number before.

A privilege, and I'm sure a lot of pressure and a lot of hours too. Absolutely. But it was great to hear. To summarize, I think UserTesting helped feed Australia during the pandemic was my take. No, I'm kidding.

I'm not allowed. I can't comment. I don't want to get in trouble with my statements.

So now switching to our second topic, related but a little different: how we influence widely across organizations and drive impact. Peter, I wanna go to you. It's not just about insights influencing solutions; it's really about helping shape what the problem or question to tackle is in the first place. I think you've got a sixty-forty rule. Maybe you can talk with us about it.

Yeah. So this was from an agile coach that I met years and years ago. He was, I don't know, some sort of punk relic, and he just said that you have to operate this sixty-forty rule, where you spend sixty percent of your time doing what people have asked you to do.

And then what they should have asked you for in the other forty. And you don't need permission to do that. Just work fast. Don't let perfect be the enemy of good in the work that you need to do, so that you can go off and just do the stuff. You do not need permission for that. You can just ask for forgiveness if you need it.

And just going back to the pandemic, I think that shifted the dial on what research looks like. I was working at Just Eat at the time.

And being able to sit with people virtually in their homes and chat with them while they were ordering their food, and see a small window into their living room, was something that we would never have got in a research lab. Every methodology has its own version of weird, and dragging people into a lab in a white office in London is odd. It's not normal for most people. Actually, now a Zoom call is more normal than anything else. So you get an insight like that.

Awesome. Thank you.

And Stratis, can you talk about how research plays a role in opportunity identification in your process?

Yes, of course. It goes back to what I said before about going deep into the why of behavior and then getting actionable problems to solve.

Because if you do an excellent job at solving a problem that doesn't exist, nobody cares. Nobody's gonna benefit from it. So, as part of how I describe what product design and research offer the organization in a very simple way, I say that we learn and we make. We learn about our customers and their problems, and then we make things to solve them.

And the things we make also act as a vehicle for us to learn more, feeding back into the learning, like a virtuous cycle. So in terms of delivering what the business needs from learning, the first thing is the generative work: understanding what the problems to solve are. And then, of course, there's the evaluative part, which is finding the right solutions to those problems and getting strong signals.

And, yeah, I'll give you an example, from before we were doing research in a robust way, of an opportunity that was not very helpful. We had observed from analytics that when people were batch ordering at Gousto, they tended to stay longer.

And there was no high opportunity statement saying, how might we make more people batch order? Because that helps retention. But that that's not a good opportunity statement because there's no problem there. There's no insight apart from the fact that and, you know, what we're gonna tell how we're gonna solve that, Telling customers, please batch order because it helps our attention.

That's not really good. Right? But then we dove deeper into the reasons. At Gousto, when you forget to order, to select your recipes, you get a box with recipes that Gousto selects for you. And very often, those recipes are not what people want.

It's hard to get it right all the time. Right? So that was the reason why those people were retaining more. And the real problem there was, how might we help people be aware of the non-chooser experience, or not get recipes they don't want, or something related to that, instead of just looking myopically at a specific behavioral data point like, oh, the ones who don't batch order retain less.

And that has really, really improved the way we define the problem and the impact we see on the back of it.

So, yeah, going into the why that drives the behavior is the key thing that has really improved how we find opportunities.

Fantastic.

Peter, I'm gonna go back to you and talk a little bit about speed and pace, and then come to you, Remya, similarly. How do you see human insights playing a role in driving innovation, especially when the business needs you to move fast?

I think that's always been one of the joys of working with you guys. In fact, I had an example just last week.

We're trying to settle an internal discussion about whether we should use the French name for for a product in France or whether we should stick with an anglicized version.

And internally, we had one view.

And I was able to show a colleague how to set up some unmoderated tests so that they could run them. They were thinking it was going to be a couple of weeks before they got the answers, and there was one already in.

And being able to show people that they can do it fast, and then taking a scaffolded approach: having shown them how to do it, say, right, you do the next one, and you can check in with me if you like. Off you go and get on with it. That means we can scale our research impact more by raising the quality of what other people are doing.

So that's one way that we look at speed. The other is that this regular research means colleagues are only ever a month away from being able to get something in front of an appropriate person and get some sort of feedback on it, which we call prototype-facilitated research. It's not usability testing. It's, here's some stuff to get the customer talking, and then we can see how they react to it.

Yeah. And then sometimes it's just about prioritizing. I don't know, apparently nobody else really does this; it's only ADHD people that do this. But usually you can find a way of wiggling something in, and then there's this word that apparently I don't really understand: no.

So yes. Consequently, I look a bit haggard today because I've said yes to too many things. And somehow it all just fits, particularly if you're prepared to sacrifice how much you do and how much control you have over it as a researcher. Yeah. If you're there to enable someone else, you can enable more people to move more quickly.

Mhmm.

And what I found is that generally, researchers who are generous with their time tend to end up with more power and influence.

And the ones who say, oh, you can't do this tend to end up ignored.

Mhmm.

Some great lessons. Yeah.

Have the right tools, enable others to move faster, and ruthlessly prioritize. Maybe also: buy UserTesting.

Buy UserTesting.

We didn't plant that, but I'm doing some shameless plugs here.

I'll buy you a drink later, Peter.

No problem.

On me. Actually, you can get me some socks. I forgot.

We used to have socks. We might still.

Remya, so look, you've got to balance deep foundational understanding, you talked about the ten percent, with the need to move fast.

When do you dig deep, and when do you just say we've got to move forward with enough insight?

I think it depends on what you're trying to achieve. Right? In some cases, you are potentially building a one-way door. So you need to figure out what good enough means for that particular project.

Again, we do that cross-functionally, coming together, and everyone has a slightly different perspective on what that means. So understanding that during the project kickoff really helps us figure out what good enough is and what scrappy research means in this particular context. And in some cases, we might need a balance of both. We might need some scrappy, guerrilla kind of testing like back in the day, or a more long-term, multi-half project to gather insights.

But also, to your point, it's about how we partner with other functions like analytics to triangulate those insights and create something more actionable. In my personal opinion, as much as we in UX research love doing what we do, combining it with data science, data engineering, or product growth often really accelerates what we are able to ship and increases our shipping velocity. So I think that really helped. Yeah.

Yeah. I think those are the two things, and I talked about the portfolio approach as well: seventy, twenty, ten. That really shows people we're not a blocker.

We are here to help you make decisions quickly and avoid expensive mistakes. I was saying to someone during the lunch break that I worked in an organization where the founder thought they knew their customers really well, and they were really hesitant to take any insights coming from research. Eventually I saw that product launch and fail spectacularly after eighteen months. It's such a painful exercise to go through, especially for a smaller startup. You don't have enough funding, and the first thing they cut was research.

But research could have been so pivotal in that company's journey, helping it create a product that customers really wanted to use, that could have retained them and brought in much more revenue. So, yeah, it's about learning from those experiences and making sure we don't repeat them.

Yeah. Yeah. I love the one-way door, two-way door idea. It's a simple concept, but bringing that kind of language into an organization, whether or not you use those exact words, matters. Just the concept that, as a group, we're gonna decide upfront what kind of decision or question we're tackling.

Yeah.

Makes the whole process much easier.

Yeah. Or even asking yourself, what is the worst-case scenario of getting this right or Yeah. wrong? Even understanding and aligning on that. I think some companies do pre-mortems, and that's also a useful exercise to start with and build from. And the research plan almost informs, okay, how much time do we have? What can we do within that time frame?

Fantastic. So on to our last section of questions, around driving innovation. We've got about nine minutes left. Stratis, I'll pass it to you. Where do you look for signs that an idea is worth investigating, or maybe worth testing further?

Yeah. I mean, obviously, it depends. Again, that's what every designer always says. But I'll make it practical again with an example from Gousto. We have these things called win initiatives, which are big transformational things we want to do that go outside the regular quarterly OKR work.

In the past, it used to be that people high up had an idea, like, oh, let's do that, and then we did it. But since then, we've introduced what we call a new product development process with some stage gates.

So, as I mentioned before, in the discovery and definition part, we need to have robust insights that underpin an initiative, and if we don't, it doesn't get progressed. But then again, as we move through the stages of discovery and definition, what good enough looks like depends on the nature of the initiative and what you want to learn. For example, for certain things, like new pricing, it is really hard to get insights from user research.

Right? Would you buy the product if we made it? If we gave you a fifty percent discount or another type of discount? That's not a very strong signal.

Right? So in those cases, and back to what we were talking about before about speed, it's about identifying the riskiest assumptions, the most important thing you want to learn, and then finding the simplest way to learn it. That's how you move fast. And people buy in when this is a risky, important thing for us to learn, like the risk that we could get the usability wrong.

Or if you want to explain how Gousto works as a subscription, a new type of subscription, and it's important that people understand it, then it becomes clear to everyone. We get buy-in. They're like, yeah, of course we need to test and get a signal on whether people can understand it before we spend so much time on engineering work and take on the opportunity cost of launching it for a month and waiting to hear back.

So it's about exactly what we're trying to learn and what's riskiest. Then people care about it, and then it's about how we can get an answer as fast as possible for that specific assumption. That's how we operate, I guess, both on the win initiatives and on the ongoing OKR work. It's the principle of assumptions and the minimum viable way to validate each assumption, and research is not the remedy for everything.

As you said, in some cases, you might just need to launch something, measure the analytics, and see whether the behavior happens, because you can ask a customer, do you want a cookie? And they say, yeah, I love cookies, that's great.

But it doesn't mean that it will drive behavior. Right? But if it's a difficult concept and you want to see whether people are actually able to use it, research is a lot more powerful and a lot more pertinent for that. So do it on a case-by-case basis.

And I think that's how you build buy-in, both for transformational work and for more tactical optimization work. Mhmm. Mhmm.

A bit of prioritizing, like Peter was talking about, but in a different way: prioritizing which assumptions to focus on in that specific challenge. Exactly. Love it.

Peter, you talked about going proactively to stakeholders and bringing them into research, which I really liked. What are some other things organizations can do to drive research as part of the innovation pipeline, and not as after-the-fact validation, which sometimes happens?

So I think there's two things. At Wise, we're really lucky that the CPO's first question in any situation is, what do our customers say? Which puts everyone in a situation of having to have an answer to that.

Ask me the question again, because it's run away from me.

No problem. How do you make sure research is part of innovation and avoid the trap of just, hey, go validate this thing we're already gonna do?

So part of it is that. Yeah. That culture.

And then... So everyone tell your CPOs, CMOs, and CEOs that customer feedback is key, is what you're saying.

Yep.

Yeah. And then I think there's a really interesting model for how to make decisions called the OODA loop, which comes from the US military.

Observe, orient, decide, act. And there's these two phases, observe and orient, before you can make a decision to do a big piece of work.

And the observe and orient bit is to do something scrappy to indicate that there is a thing we don't understand here.

Therefore, we need to invest a lot of time and effort in doing the work to understand it. So taking the initiative to roughly capture the customer voice and define the problem helps you create the case for doing the larger work.

Fantastic.

Thank you.

Let's end on two quick innovation questions and then we're gonna get to AI. Gotta get to AI.

Cool.

Stratis, to you. You've given us some great examples. What's maybe one more thing that's worked well in your org to keep insights always at the forefront of innovation and new ideas in development?

Yeah. I think it's all the stuff I've talked about already, putting it into the system. But another thing is about showing.

Very often, a lot of the documentation companies have on how experiments and initiatives are going lacks that visual aspect of here's what we did for the customer: showing the product and how it changed, but also linking to videos or some kind of material that shows users using it and benefiting from it. Those two things are very powerful. In the past, that was not happening in the artifacts we were using to communicate. But now we've started embedding them a lot more. We have screens that show the before and after, and prototypes that people, even stakeholders, can actually use.

And also weaving in links to videos. Of course, it's not something groundbreaking, but very often people forget about it, and they're like, oh yeah, it happens, but it's too much work.

But if you can automate it and create templates in a simple process, like, okay, I have this interview clip link, I store it in the drive, and then I link it into a document when I need to, it becomes a lot easier.

It's a little bit painful initially to create those little steps, but then it just makes your work so much more visible across the organization, and people actually seek it out. For example, I was in a weekly trading meeting where they were just talking about Gousto's trading progress. Since then, we've introduced, okay, here's the trading, but here's what changed in the product that led to it, and here's what customers are saying.

And that was a lot more powerful. People were like, oh, we need more of that. Can we make that part of every weekly trading meeting, at the end? So, yeah, I would say those two things: show the stuff you're making, and show how customers are responding to it.

It's not groundbreaking, but it often gets missed. I would say don't let it slip. Just make it easier for yourself so that you don't let it slip.

A hundred percent. Don't hide the research. Yeah. Seeing is believing. Yeah. So help your stakeholders see.

And apply, you know, the minimum viable solution to the way you work, too. What's the easiest way you can do it? Yeah. So that you don't get overwhelmed and distracted by other things and end up not doing it.

Yep.

And then also back to the sixty four zero, I guess, of, like, perfect is the enemy of done, or of good. Mhmm. Applied to the way you work as well.

Great. Okay. We're gonna jump to the AI question here. In the interest of time, I'll just ask each of you: give me one prediction about how AI is going to impact your work, your role, or your organization in the coming years, or days, in AI time.

Anyone who wants to jump in, go for it.

I'm sure this is a new topic. You've not not thought about it at all.

Story of my life.

Two things. One is AI agents: not replacing, but taking the excellent customer support function that most organizations have and allowing it to focus on the specialist cases, because an AI agent can do the mundane stuff. That's just inevitable. Yep.

And I'll come back to you with the other one. It's just run away from me. I'll come back.

Sounds good. Who wants to jump in next?

I think we're all just gonna be replaced, and then, you know, get money from the government and do nothing and leave it for... You went there, man.

You just went there.

No. But on a serious note, I think there are two different areas. Right? One is productivity.

Obviously, speed is gonna increase a lot by automating a lot of robotic, repetitive tasks. It's gonna empower us to focus on problems more, which is great. And the second thing is the user experience. I think it will be a great way to help us bridge the gap between intent and action.

So think about how we navigate the world. We want to express our intention of how something should be or what we want to do. And then we either use language, like saying at a restaurant, okay, what are the most popular dishes?

Or we use direct manipulation, which corresponds to the graphical user interface in the digital world: moving a chair left to right so you can sit, or moving an image in Photoshop. So in terms of the user experience, I don't think it's gonna replace everything and make everything conversational. It's that it will enable conversation to be a viable mode of interaction.

So for things that you can easily express through language, let's say Amazon Prime Video, if you want to see horror movies, you can easily say, well, I want horror movies, and then talk to the AI agent to get the ones you want or filter them a bit. But if you want to skim through them, it's a lot easier to still use direct manipulation to go through all the different summaries and scan them instead of having someone read them to you. Conversation is not great for everything, because sometimes the cognitive effort of expressing something through language is high.

So I would say it will make conversational experiences more viable, bridging the gap between intent and outcome, but it will just sit on top of everything else we're doing. It's not gonna eclipse direct manipulation and the other modes of interaction we have at the moment, at least in the immediate term and for a few years. I don't know. In the future, maybe we'll have Neuralink, and we'll just express our intention without even speaking.

But, yeah, before that happens, I think it will just be an extra modality on top of all the stuff we're using already. Great.

I mean, as you were talking about horror movies, I'm thinking you could make your own horror movie.

Yeah. Yeah. Yeah. Right? So that gives you the future of entertainment. I don't think AI is gonna replace us one to one, but another human being who knows how to use AI definitely will.

That's how I always think about it. Again, that's as of now, with the information I have today, and I might change the statement in the future. But, I don't know, this is my feeling: I think we are barely scratching the surface of the potential of AI. I don't know what type of job I'll be doing five years from now, and I always wonder how I can evolve with the technology as it moves. But sometimes it's also exhausting, because your brain is constantly asking, what am I missing out on?

What do I need to catch up on? It's a constant backlog: figuring out how to find things, how to learn, and then how to bring those learnings into something actionable in my day-to-day work or my personal life. So, yeah.

My two takeaways: first, I think a lot of people get scared when they hear that AI is going to replace them, but my answer to that statement is that it's not AI directly. It could be an AI-powered human being who replaces you, because they know how to use the, you know, improve agent engines that we talked about. The second part is for all of us to think about the biases involved in AI, whatever tools we use. I know we talk about using AI to cut our research time or significantly improve our design process. But at the end of the day, you still need to apply human empathy, connection, and judgment to ask, is this the right call? Because bias exists in all of us, and the models we train will have those biases as well. So how can we make sure we don't lose our human side as we go through this new evolution? That's something I deeply care about.

Great. An optimistic view of the opportunities. I love it. I love it.

Peter, did you want to add anything to close?

If you're a pattern follower, you're gonna be replaced.

If you're a pattern maker Love that.

You will thrive. Rule breakers will be the future.

I love it. I love it. Thank you all very, very much for this insightful, awesome conversation. Please give a warm round of applause to our panelists.