Panel Discussion: Delivering on Brand Promises Through Human Insight and Design

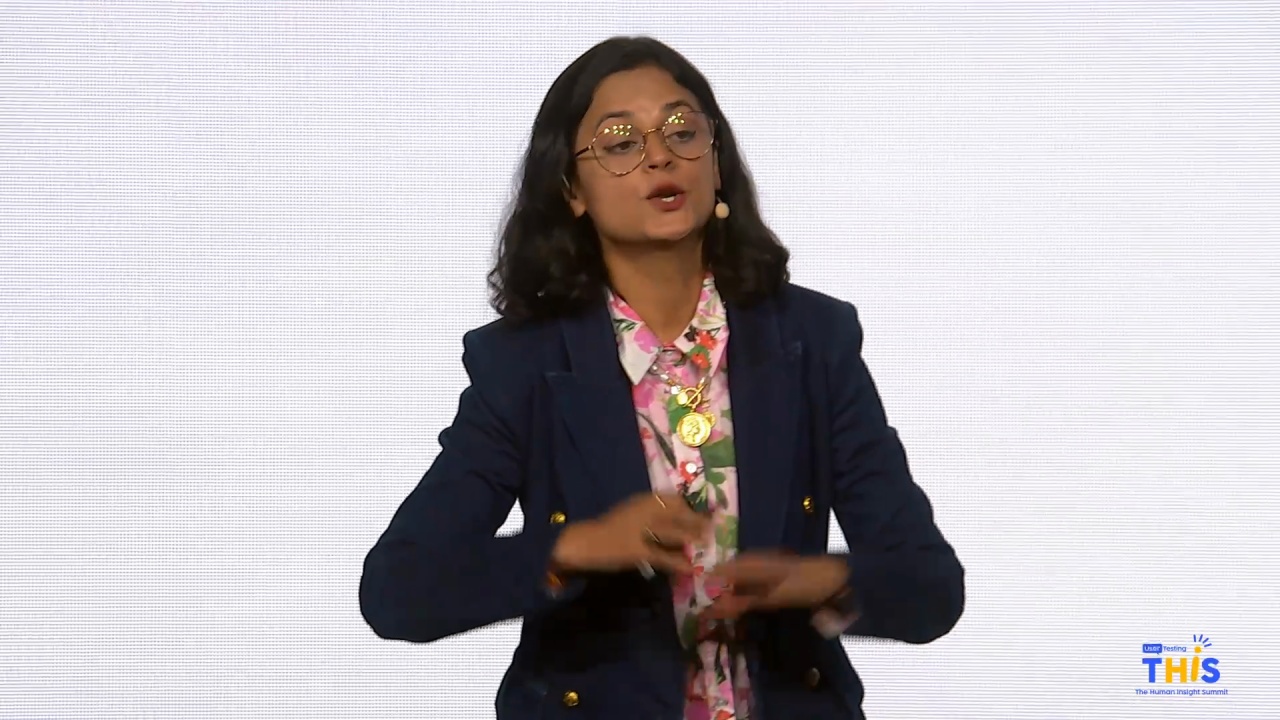

Amy Stillman

Vice President, Product, Cedar Systems

Dan Van Tran

Chief Technology Officer, Collectors

Danny Blatt

Senior Director CX Research & Behavioral Science, Pfizer

Michelle Engle

Chief Product Officer, UserTesting

As organizations scale, staying connected to the customer—and consistently delivering on brand promises—becomes increasingly complex. In this panel discussion, leaders from Collectors, Cedar Systems, and Pfizer will share how they’re driving smarter decision-making, fueling innovation, and ensuring every product, service, and experience stays true to the brand. Join this conversation to learn how top enterprises are operationalizing customer centricity through insight-led design and collaboration.

We're gonna kick off next with a panel, and it's focused on how to consistently deliver on brand promises.

And so we're gonna hear from teams from Cedar, Collectors, and Pfizer on how they stay aligned with customer expectations, make smarter decisions, and build experiences that are true to their brand. So leading the conversation, we have Michelle Engle, our chief product officer. She brings clarity and focus to everything that she does, and somehow, I don't know how she does it, she still gets us to work out with her when we travel. So, Michelle, I think tomorrow morning might be a little early for me. But welcome, Michelle, to the stage.

All for you. Awesome. Well, it's great to be here. I'm excited to see all of you.

I'm Michelle Engle, the chief product officer for UserTesting. So I will be around. I love seeing some familiar faces, some new faces. So I'd love to hear your thoughts, what's working, all of those things.

So thank you for being here.

So let's go forward.

Okay.

Okay. So we have an amazing panel set up for today. I love panels. I think they're a great way to learn.

We have such amazing customers who are so generous with their time, willing to share and help everyone in terms of their own practice and how they can get better. And getting to know these panelists over the past couple weeks has been amazing. So I think you guys are going to love what they have to say and have some great things to take back. So, I am going to introduce them.

So first up, we have Amy Stillman, VP of product for Cedar.

You guys come up.

Danny Blatt, senior director, CX Research and Behavioral Science for Pfizer.

And Dan Van Tran, CTO of Collectors. Woo hoo.

Cool. Okay. So let's dive in. So, we have these amazing panelists with us today. I think we're gonna kick off by having each of you give a feel for your role, your organization, and what you're doing.

Cool. Yeah. Why don't you go first? Great.

So I'm Amy Stillman. I work at Cedar Health, and we're on a mission to make health care affordable. So we're a B2B2C company, and we sell into health systems and take over their revenue cycle management practice, which is basically a combination of an operational and privacy.

And then we help service their patients and send them their health care bills. And, really, with our mission, the idea, and kind of why we're a little bit different, is that we believe that everyone should be able to afford health care, and so there are many ways to resolve your bill. It doesn't always mean paying in full with a big scary red stamp that says you're going to collections.

There's lots of different avenues that we try to help with to make bills affordable and easy to use. So yeah.

Awesome. Helps all of us in our lives. Go ahead.

Hi. I'm Danny Blatt. I am the senior director for customer experience research and behavioral science at Pfizer.

So at Pfizer, we're trying to improve the health of a billion patients a year, and it's really important that we think about their experience, both the end patient as well as the health care professional, and try and reduce the friction that they experience going through their health care journey to make it as easy as possible for them to get the health care that they need.

Awesome. Dan. Hi.

I'm Dan Van Tran. Apparently, I'm the odd one out in a lot of ways here. So I'm the CTO of a company called Collectors. We authenticate and grade collectibles, millions a month. And so if you imagine what one and a half to two million trading cards look like in a building, it's wild.

But the company was actually founded in nineteen eighty six. I was called in in twenty twenty one when they did a rebuild of the company. It was acquired, taking it from public to private, for eight hundred fifty million dollars, and we were brought in to understand the technology, what was holding it back, and what we could do to really unlock the scale.

The scarier thing wasn't the technology. It was the fact that all of their brands were number one in their space, and they didn't know why. And so that's what led us to UserTesting and to do more user research to really figure out how did this thirty five year old company get to be number one and stay number one when they didn't understand what led them there. And it's been a fascinating journey.

Awesome. Well, I can't wait to hear more about it.

So to kick us off, let's do a little bit of a lightning round. Why doesn't each of you share something about your role that maybe the audience isn't aware of or that could be unexpected for them?

Dan, do you wanna go first? Sure.

Yeah. So I covered a little bit about it, but part of the interesting thing about the collectible space, and especially about the company that we worked with, is that initially we came in with a hypothesis that the technology was really the thing holding things back, and that the experience of the products, given that everything was really still based on carbon copy forms even in the twenty twenties, was really the thing holding everything back. But as we did more user research, the thing we found was that it was a little bit of that. We did need more applications and more technology, but really the bigger thing that held users back were some concepts that were built in that required a lot of expertise to understand.

So for example, when you're submitting these collectibles, you had to enter what was called a declared value. This was just the insured value for the item because we have to insure it during transit, but people didn't quite understand what value their collectible had. A lot of these were unique. And so what we ultimately did was shift it from being just an insured declared value to being automatically calculated based on the service level you choose, which is more understandable.

How quickly do you want the thing back? We will insure it up to this amount. If you wanna insure it more, you just change the time that it takes to come back. And it led to a tremendous influx of more submissions as people felt more comfortable with it.

Somewhere between twenty and thirty percent more submissions came in after that. But it was just a really interesting thing to come into thinking I'm gonna rebuild technology when it was really I'm gonna understand the people.

It's all about the people and understanding your user. Yeah.

So for me, one of the interesting things is that people often don't know who they are when it comes to their health care journey. So a good example of that is we have a medication called Paxlovid, which is designed for people with COVID who are at high risk of severe cases of COVID. And most people understand that that's what Paxlovid is for, yet they weren't getting the treatment. So we figured give them more information, really make sure they understand that it's for people in high risk groups.

But, actually, that wasn't the problem when we did the research. What we uncovered was that people didn't know that they were at high risk. They didn't know what the symptoms, what the indications were. So all we had to do then, rather than marketing more about Paxlovid, was market more about what is a high risk group, and then the right people got the treatment.

So sometimes it's just doing the research and understanding what people need and who they are.

Awesome. Great.

I'll kinda speak to that. At Cedar, we're a premium priced product in market, so we're expensive.

And we are expensive, and we can be expensive mainly because of our results. We're really a value story for our customer.

And how do we get there? Like, what makes Cedar different from another bill, a hospital bill that you might get? And one thing is we spend a lot of time trying to really understand our patients within their cohorts, within their regions, and their behavior. And that not only helps us make our product twenty to thirty percent better than the baseline that the customer was on before, but it also is information that our customers want. Our customers value us as a strategic partner in helping them understand their customer segments. And so that's been kind of a shift, in that we're not just about the product, but really the service of delivering business insights and patient insights to help them make better decisions within the organization.

And that's been cool to see. And, to that point, Jai, our director of research, is here. We've actually been able to spin up research credits, and our clients are actually asking us to solve more problems for them and getting more research, especially in such a highly regulated space where it can be really hard to do research. They see us as a strategic partner in answering other fundamental questions and are buying research projects from us, kind of creating a little bit of a research P&L for that. So I think it's not just that research funds our product development, but also that it is part of our strategic partnership and why folks choose to.

Yeah. I love hearing all of these examples of how you are delivering value to your business at its core, and, I think we're gonna explore that some more as we go through. So, okay. So we're gonna start by talking just briefly about structure, the structure of your organizations.

A lot of organizations handle research in different ways. They may have it as a centralized team. Others are more distributed. At UserTesting, we have a centralized team that then is distributed out into our different squads. So to kick us off, Dan, could you talk a little bit about the way your team is structured and how that is working?

So we ended up where, apparently, UserTesting is today, where it's centralized and distributed. So I guess that's a working model for a lot of companies. But when we first started, there wasn't actually a product team, a design team, a lot of these fundamental teams.

That was part of the problem. There were only twenty people or so in tech, eleven software engineers, but a thirty five year old company with seven hundred and fifty people, about to be twelve hundred people, thirty five years of stuff, products, like, three hundred web domains. It was insane, but no product managers, no user research, no design. And so we had to bring those functions up quickly.

So the question is, where do you put user research? We wanted to short circuit the journey from the insights to the actual products we're building and the technical, direction we were getting. And so we put them together initially in the product and tech team.

However, over time, it became very clear that that was good short term but a very poor move long term, because you want to separate out the user research function to be more ahead of everyone else, to really be thinking about what are you doing next, how are users reacting to things, and then, as a result, how do you pivot? So over time, we put the team into the marketing function, which already is thinking about customer acquisition and a lot of the future facing things, and embedded them with the product and tech teams to ensure that there's still that connection, so that over time we could still move quickly and take those insights and bring them back to the point where we're creating and pivoting the products and the road maps. But their home team is still the future focused team, which allowed us to really have the best of both worlds.

I like that notion of a home team and then the other team. That's a great example. Amy, what have you seen work in your work?

We're similar in that we're centralized and then distributed. I think the one caveat for us is that what we call makers, the technology side of our house, work in squads, and so we do have a backlog specifically to work on the different product work and optimization. But we also have a separate backlog and resources dedicated to the growth team, which is kind of more the future looking products as well as the customer insights that we serve up in our quarterly business reviews.

So that's worked really well for us. I think the other thing, though, we've done is that we think about how we scale the work from self serve, really democratizing research and making it available to everyone, to then consulted. And then we try to focus researchers who are owners and embedded on, like, our highest strategic value problems.

So we want a product designer to be able to test some copy by themselves. But if we're in LRP, long range planning, and we're deciding our two to five year vision, we want research embedded with the growth team to be thinking more about the customer value of what we're delivering there. So, kind of different working models that are centralized and distributed.

So let's explore that for a little bit. How do you make sure that the insights that are being generated from your team really tie back to the most critical decisions that your company needs to make? Yeah. Good tips there.

I spoke a little bit about long range planning, but I think that's really crucial, and kind of to your point of, like, how do you get research in front? How do you get research defining the problems and not just making sure that the solutions are, you know, executed and optimized? And so I think, like, figuring out who your strategic finance partner is, figuring out what that CAGR goal is three to five years out, figuring out what the gap in your product strategy is, and ensuring that the questions that you're asking are backed in research.

And having those insights is really important. I think then the other thing we do, just to make the brand of research well known at Cedar, one of the things we really thought about is programs.

So it's almost like a TV show. What are those recurring segments that we have that kind of help research insights get distributed?

So for one of those, we have something called, like, voice of the patient that shows up as a segment in our all hands. So at our all hands with our five hundred person company, once a quarter, we pop in and do some clips of users and some quotes, I'm sorry, instant insights. And that's just helping the organization also understand how to utilize research by seeing the work. I really believe that seeing is believing. So seeing the work and having those public places, and that packaging to make it repetitive, really helps us to make sure that the organization understands the value of research that we're bringing, that it's not just optimizing copy, but it's strategic thinking.

Yeah. And really driving that empathy for your customer down. Yeah. Yeah. And, Danny, I know you have a centralized organization. How do you make sure that you're driving that through the organization?

It's interesting listening to these guys. I've got a lot to learn. We could think about reorganizing maybe. But we are centralized under the digital team, which means that we're the customer experience and engagement team, centralized under digital. And within our team, we've got research, which I lead, but then we also have a strategy team, a design team, and an experience effectiveness team.

So we all work together very closely, which is great because we're able to share expertise and really advocate for the customer.

But then when an internal client comes to us, whether that's supply chain or R&D or commercial, and says, we have a customer issue we wanna solve, we're very disconnected from that business because we're very focused on our little home. So what we've done internally within the CX&E team is create what we call pods, and they're basically project teams that are assigned to each business unit. And each pod is led by what we call an experience lead, and that person is intentionally almost embedded in the business, and they become the conduit between the business needs, thinking strategically, as well as managing the customer experience team so that they make sure that we're delivering on their needs, the internal clients' needs, and the customers' needs. And then it really becomes their responsibility to make sure that the insights are acted upon and implemented.

Yeah. I think one theme in there is no matter that structure, that model is making sure that research isn't an island. Right? It's a very collaborative function. It works with a range of different stakeholders.

So to that end, how do you make sure that the research that's being done is aligned with the product road map and execution?

Do you wanna start with that as CTO in the room?

Yeah. So we actually do a decent job of it. I think there are two overall ways that we try to incorporate it. One is the synthesis of the findings after you do the focus groups and user research, and making sure you do a roadshow, communicating that out all the way from the executives, which I always get in every company, but all the way down to the people doing the work, so that they actually have full context into the why of what we're doing and why we're making certain decisions, so that when they're actually pivoting in the implementation, they can make sure to keep those values in mind and make good decisions that are still in line and in service of what the people actually want or need.

But in addition to that, what we actually offer is the ability for people to come and sit in on these sessions, and so they can actually watch live or hear through Zoom or otherwise what people are actually saying in these groups, so that they can hear and be like, wow. I never thought of that, or that's a really interesting side effect of something that I'd built but didn't really think that people would interpret that way. And then they would pivot, and it really helped everyone end to end to understand the whole user journey as well as what people cared about.

And over time, especially because it started with only twenty something people in product and tech, we scaled out quickly. It allowed us, as we scaled, end to end, for everyone to truly understand what we were doing, why we're doing it, and what the users actually wanted.

Awesome. Great.

Okay. So let's shift gears a little bit to talk about less around structure and collaboration and more around how do you take all that research and insight and level up to be the hero in the organization as we know all researchers are.

So how do you ensure insight is influencing solutions and helping shape the problems that are worth solving? So not being brought in at the end when there's already a solution proposed or being deployed against the problem when it's not necessarily even the most pressing problem.

Danny, where does research play a role in opportunity identification?

Yeah. I mean, you hit the nail on the head, though. We are far too often reactive.

Someone has an idea, and we have to test it and see if it works.

But we are trying to, you know, flip that script. And the way we're going about it is by doing fundamental research strategically, not asking for business approval, but knowing, okay. Here's a challenge that we foresee coming. Let's really understand what that customer's challenge is and then bring that to the business. So we've done that a few times now looking at, for example, oncology patients and caregivers.

What are their main challenges?

And even though the business wasn't asking us to do that, we did the research. We uncovered pain points. And then when you put it in front of them, if anyone has any empathy, they're like, oh, these are problems worth solving. It takes a while to get the machine turning, but then it does and you start to see the improvements. So I really think it's about doing research at risk, or strategically, to get ahead of it.

Makes sense. And when we talk about strategic, what comes to mind for me is your example, Dan, of how you came in and really used research to help set that new strategy, what it should be for the next three to four years. So how did that discovery process influence what you prioritized, and how do you continue to do it in your day to day and in your organization now to differentiate in the market?

Yeah. To be fair, at the beginning, we did it poorly.

And so everything was reactive. Because we were coming in to clean up this organization, it really was like we were treating it as if we were a start up starting over again. But like a start up, we had a lot of great ideas and not much clarity into how to prioritize those things. And so a lot of the good ideas came in, and then people would just react and start working on them and implementing them, much like a lot of themes from this morning, with very little actual research to back what are we doing, what are we trying to get out of it, should we even be doing this now versus something else.

And so over the past four years, we've gotten much better at actually thinking about that and embedding user research throughout the entire, what we call in software, software development life cycle, the SDLC, so that in every phase, from the ideation all the way through to the post implementation, we have user research as part of it. And so for example, one of the things that we're doing right now is there are a series of pretty novel ideas that we're looking at, and now, instead of even building wireframes completely or mocks, we're actually giving them to the user research team and letting them figure out what should we do.

And in some cases, they're doing some user interviews. In other cases, they're taking old interviews and looking through the data to help to prioritize and process things. And it's allowing us to say no to a lot of things or put things on backlogs that we otherwise would have run with, even a year ago. And so it's been very helpful to embed them closer throughout the entire development process.

I've heard that quote that, strategy is not just about what you're going to do, but what you're not gonna do. And I think that's key there.

I think for us, that's been similar too, which is, like, sometimes you aren't invited to that conversation around the what. But where research has been really impactful is more about defining the problem further. So, like, our example that I would use here is, we knew we were gonna build an AI voice agent this year. Health care, especially at the intersection of finance, so PCI and PHI, is a pretty regulated space.

We really needed to home grow a solution. We kind of couldn't count on the hallucinations that would happen, with something that we would buy. But so knowing that we were gonna build this thing, kind of that problem was already defined. But what we needed to focus on to get it right is where research kind of stepped in.

And we actually said, let's back up and do just, like, a little bit of discovery research. And let's understand, like, based on other AI agents in the market, what is working and not working? So we listened to, like, Amtrak's voice AI agent and did research with folks, like, just using these other experiences. And we understood a lot about where people's tolerances were, and we realized that was gonna be so important to adoption for the AI voice agent.

Otherwise, everyone's just gonna think that it's a phone tree and be like, agent, agent, agent, live customer, like, get off the phone, which we still hear people do. But really figuring out, people's tolerances for AI agents is, like, three minutes or less. In the first thirty seconds, you need to give them a piece of value to get them to connect and realize that it's actually helpful and not just a phone tree. But some of those things helped our development cycles.

So it was less about, like, the problem space, but more about defining the problem of where we should focus. I think that research offered, like, a ton of value.

Yeah. I think that's really interesting because we do focus on the hallucinations that come out, but that idea that just prove your value quickly and try to get buy in. And even if they know it's AI, that they'll continue to use it.

So as you think about the tools or the secret tips that you have for how you build influence in your organization for those insights, what has helped those insights travel further for you? Amy, do you have an example?

Yeah. I think a lot about, and Troy's done a great job of thinking about, the packaging, again, of the research. So how do you get to the bite sized thing that everyone can kind of remember about that juicy insight? One of the best ones was, one of our designers did a piece of research, and Jaya kind of helped facilitate it.

But the bite sized insight that came out of it was, like, it's not rude from a robot. And so what we learned is that even though sometimes the digital channels could feel less personal, actually, when you're talking about something that's so personal, like a health event that's happened to you, and you might be in financial crisis as well as health crisis, we found that users actually preferred kind of the anonymity of a digital channel. But that insight, it's not rude from a robot, like, still lives on at Cedar.

Like, it's one of our things. So, again, it's like, how do you make the insight something that's memorable and usable so that others will say it? The best piece of research is if someone else is talking about your research versus you having to go and explain as a researcher what it means. Like, you want people to adopt it.

And so I think oftentimes we think about the research bite sized pieces almost as products.

Mhmm.

And so what is that product that we're defining, and the brand around that, to help people really anchor and use this insight in their work?

Awesome. Dan or Danny, any other tips you've used to try to elevate business?

So I love that, the idea of the bite sized insight and let them do it. We used to say at my old company, you wanna give people a cocktail conversation, like, something they can't not talk about. What I have found to work really well is to be super provocative in your recommendation, and then someone will challenge you and say, well, where does that come from? Now we're into the data, and now they're asking me to show them data, where most people you're sharing it with are allergic to data.

So you're starting a different conversation, and now it's about the recommendation as opposed to challenging the sample size or challenging the power, whatever it might be.

So start out by poking them there.

Absolutely.

Right in the eye. Got it. Yeah. How about you?

In a similar way, we present the data to back a lot of the decisions we're making, and a lot of it's infographics or other things that are provocative. And so, for example, one hypothesis we came into the business with was that, especially during the pandemic, there was a boom in the collectible space.

We anticipated that most of these were people who are doing what's called prospecting, buying these players that are rookies and they're gonna be big players, the next Michael Jordan. And so they're buying all this up, hoping that one day they will be that, and then they're gonna make a hundred x profit.

And so what the data from the user research actually proved was that that portion of our customer base was actually way less than fifty percent. I believe it was maybe even a third. And we had anticipated that would be something like seventy, eighty percent, and that completely changed a lot of the prioritization of the road map for the next two to three years. But as a result of that pivot, we actually then were able to address the demographic that did matter, which were the true hobbyists. And a lot of that was just including the data and then showing the provocative stats, but then making it so that, like, here's the scenario, and here's what we're gonna do as a result, and it really tied the story together.

Yeah. I think for all of us, we're in, I'm gonna venture to say, very competitive markets that are only getting more and more competitive with all that's going on in the world. And I think that innovation is really where you can separate from your competition, from others in the space. And so I think it probably makes sense to shift gears, talk a little bit about innovation and how human insights play a role in the innovation that happens in your organizations, especially when it's about speed, experimentation, moving quickly.

How do you all move fast? How do you drive that pace in your organizations? And what do you look for as signs that that moment, that idea, is worth building, and you're willing to ask for that investment or push forward?

We're really into this concept right now around the MVT. So not the MVP, but the MVT, which is the minimum viable test. So what's the smallest thing that we could do to glean if this would have traction elsewhere?

If it's a mini A/B experiment, if it's, like, a set of questions we can release to users, but really figuring that out. And it's stolen. It's, like, a First Round Capital thing. Thanks. I didn't coin it. But we plan a lot in our road map around what are the MVTs we wanna try right now. Okay. And how do we just get that small signal to know to do the future investment, because speed is so important right now.

For us, it's not, like, a ninety day research project. I could never imagine that we would even fund or do that. It's thirty days, ten days.

How do we just ship repeatedly?

Okay. Yeah.

How about you?

So because of our centralized model, we're so closely connected with the design team and the strategy team.

We're able to build and test iteratively really quickly and just throw things against the wall, see if it sticks almost, and then bring that to the business team. So we found that to be very successful. And, also, tying that with what I was talking about earlier, having the upfront research, it's not just a true guessing game. You actually know why things are working and why they aren't. So it's easier to bring that to the business and try and get that investment.

As mentioned before, we include user research in everything. So many of you have hackathons in your companies. We actually include the user research team in our hackathons. And as part of that, we include the entire company.

And so anyone who has an idea can actually go with it to the user research team and start to test that idea in a short period of time, and we'll assign engineers and product developers. And people come up with the wildest stuff. And some of it sticks, but a lot of it just gets enough buy-in to say, okay, well, we may not want to put this on the roadmap yet, but we will do a little bit more.

And so, for example, with this past hackathon, there were about three or four ideas that made it all the way to the final round. We're a pretty big company at this point, two thousand plus people. But three or four of these ideas, and I think all of them were backed by user research, we are going to pilot at the National Sports Collectors Convention, which is the National. Everyone has a national in their industry, but this is our National, and we're gonna see how well these ideas work out, and then we'll pivot as a result of it.

And some of them are likely gonna make it into the final roadmap, even this year. We may move things out of the way to make room for these. But that's, again, the benefit of acting like a startup even though we're a big company, and a lot of it is just the power of user research and what happens when you elevate it in the company.

That's awesome. Great example.

So, Danny, when we first spoke, you mentioned how critical testing was for not just your digital experiences, but also the physical experiences that you at Pfizer have. How do you translate what you're hearing from customers into design decisions and feature priorities, and how do you know you're actually solving the right problem?

So for us, it all comes down to how we connect our research process. So I spoke earlier about, you know, doing the upfront research, really understanding the needs, barriers, challenges of our customers. We always have that foundation.

And then through our testing, whether it's digital or physical experiences, we're doing iterative testing, and we're understanding the results we're getting from that user experience iterative testing.

Is it solving the need? Why is it solving the need? Why is it not, etcetera. And we're always able to tie those things together.

And then finally, when products are in market, we're also collecting VOC data. And it's really the power of having one researcher who's responsible for all three of those research topics, bringing it together, and being able to deliver what we call, like, an integrated insight report. And you're always able to come back and say, you're still missing the mark. You're still missing the mark.

So, like, one example is we know how important price transparency is.

The customers have told us that. We're not delivering it. And in the voice of customer, they're telling us we're not delivering. Every report, we're telling them you need to be more transparent about prices. It's a regulated industry. It's very hard to be price transparent, but we know that that's a major need that we need to figure out how to solve.

Totally makes sense.

So let's talk about how organizations can ensure research is part of the innovation pipeline. So we talked about hackathons and those types of things, but kind of at the core of how your major investments drive the innovation of the company, and not just being used as validation after the fact. Like, we've already decided we're doing this. We, you know, just do it. Just build it.

What's one thing that's worked well in your organizations to keep those insights at the forefront when working on new ideas or driving innovation?

Anyone wanna go first?

We try to include it as part of planning. Like, we try to package it up and make it easy and digestible. But, again, I think it's also kind of pushing it forward to that long-range planning period. If you really wanna get to the three-to-five-year plan and more about what we're gonna discover, a lot of that is based on some research fundamentals that you can come into the room with, which are like, what are our core competencies? What are we really good at?

What's our competitive analysis? I think it's starting with that layer of secondary research to then pinpoint, like, if we are gonna go more into this, let's spin up a study around that, and then being able to be fast. I do think speed is such the thing there, especially within these planning cycles. Like, there's a schedule.

You wanna be moving on it. There's not, like, a ninety-day break to go do research. So how can you work with the tools that you have to execute on that?

Makes sense.

Yeah. I agree completely. And for us, it's all about being flexible and adaptable. So what do they need right now?

What's gonna convince them? We have some internal clients who don't wanna talk about research unless it's n equals one thousand. And in this room, we could debate whether that's right or wrong. I think most of us would say it was wrong, but that's not the argument to have with them.

Other teams, they just wanna know the answer, and they leave the method up to us so we have that flexibility to do that. So I think it's about us flexing to what we know is gonna help make the business impact.

At Collectors, here's what I really encourage, especially being in the role that I'm in. A lot of times in organizations, the ideas come from user research or the executives to product and then tech, where tech is the how, like, how it gets built.

So what I did was create a backdoor that's always open that people can go directly to tech and actually just talk about wild things. Like, whatever imaginative thing they might say. Like, imagine if we could do this with AI. I've heard that agents can do this.

What if we could do that? And so what I encourage is for the user research team to talk directly to the engineers to say, here's the problem we're seeing. What are some crazy ways that we could use current technology to solve this? And to be honest, most of those likely won't become real ideas, but in almost every case, there's some part of it that you can carve out and actually put into a real product or turn into something that's much better.

And so a hackathon is just one time, you know, one event that you can use to do that. We actually have recurring conversations. I meet with the head of user research at least once a month just to talk about these wild ideas. And I actually come to her saying, here are some of the technological trends we're seeing in the industry.

And then she comes back to me and says, okay, based on that, here are the problems I see that overlap with that. And then we usually come up with something that we then bring back to product or the executives to get further buy-in.

But it's been a winning formula for us to be able to get a lot of these more innovative thoughts back into the user research team and to empower them to actually get them onto roadmaps.

Yeah. I say that a lot in my organization. Like, the best ideas are not coming from product management sitting and talking to whoever they're talking to. But, you know, throughout the organization, people working with customers on a day to day basis, technologists, anywhere in the organization can have an amazing idea, and it's about how you can funnel that up.

In our organization, we also have a product strategy team. That product strategy team has one of our most experienced researchers on it. And so as different concepts and ideas come up, she is the person sort of vetting them and helping to see where they go. And so it may come in looking like this, and it comes out looking very different. But we definitely have kind of pulled that into the strategy side.

Okay. So we have about ten minutes left.

It would not be a panel if we didn't talk about AI. So we are gonna sort of change things and talk a little bit about AI.

So when you're looking ahead, I'm gonna call on each of you.

What are your top... or, actually, let's start for a second with how all of your organizations are starting to use AI. So could you just share with the audience a little bit about what you're looking at in your organizations, what kind of guardrails you've put in place, and then we'll come back and talk about predictions.

So I'm in, again, the fortunate position of being the head of tech, and so AI is really at the forefront.

And the other interesting thing about my organization is that the board, the CEO, no one's really pushing for us to use AI, just because it's not an industry that is similar to the traditional industries I've worked in. And so I'm the one mostly driving it, but it also means that I have a lot of flexibility, and I control the budget. So I can do a lot of things.

But as a result, we now have a company-wide OKR that's around infusing AI into every part of the organization through experiments. So that's not to say that this is gonna replace people or do anything like that, but really, how can we make your lives better, so that we can take away some of the tasks that are taking up time and free you up to think about the bigger, more important things.

So that's one aspect of it. The other aspect is a bit more product related, and it's the coolest thing. We just released it earlier this year. One of the dreams I had coming into this, and the technology wasn't really there at the time, was this: as someone who collects, I now have a lot of subject matter expertise that I've built up over the years, and it's very nuanced. But what I would love is for a parent with their kid to go into a card shop, open up a pack of Pokemon cards, and just be able to scan through with their phone to say this is worth something, this is worth something. So, you know, they save the kid from playing with the card that's potentially worth a thousand dollars, things like that.

But also, like, a lot of us, you know, we inherit these collections, or we have friends that have, like, one-off cards. To be able to just scan the card to know what is this and what's it worth, we released that app earlier this year, and it has, based on our data, somewhere in the range of an eighty-five to ninety percent match rate. And the price estimates are pretty accurate. And so people are now using this, but again, back in twenty twenty-one, the technology wasn't there. It's evolved enough that we were able to build these custom models to be able to do this, and it's just amazing what AI can do.

Everyone's gonna leave and go look in there for good reasons for the things. How about you?

Yeah. We're definitely looking to apply AI very broadly. Of course, the challenge is we are in a very regulated industry, and if the AI gives poor health information, that could be a disaster on multiple levels. So we're taking a very calculated approach in how we do it.

From a research point of view, we're leveraging AI for the typical things that research organizations do. So looking at an insight hub, how can we take all of our historical data and reference it back easily or readily? And we're doing similar things in different parts of the organization, but being very careful not to make a mistake.

Yep. I understand that. I think, like, the first thing that we're building is really the foundation of the technology so that we can be really safe and really own the product experience. But I'll talk more about our workflows. I think what's actually been more interesting for us is how it's influenced the way that we work with each other.

I am the head of the product org right now, and I have product management, design, user research, and data science. And each team is using it a little bit differently, and some of it is about solving their pain points. So the product management team gets pinged with a lot of questions. We actually have a bunch of new CMs, client managers, as we're growing our portfolio, asking questions sometimes about the product, to the point where, even with our best product managers, I'm like, you're being too helpful.

I see you all over Slack.

Like, fewer Slack answers. And so one of the things that one of the PMs did recently is they built a bot in Gemini, a Gem bot, and let it read, like, all of the product documentation and then created this little thing that a CM can ask a product question to, and it will go and find the answer. And that's been so helpful as a resource.

It makes the CMs happier, makes the product managers happier, and we can kind of continually update it. From the design side of the house, so, yeah, for PM, it was about solving a pain point that was taking away focus. For the designer, I think it was giving them more leverage to innovate. I think as a designer, it can seem scary that AI is gonna replace the designer and, like, the craft of design.

But so much about great design is actually about solving the problem and having the taste to know which solution is going to solve it. So it was actually funny. We had one of our design directors give it a bunch of information, give it our design system, and ask for, like, four concepts for a patient billing hub. And we got four concepts back, and, like, two of them we actually know from our A/B testing went really horribly.

So I think for the designer, it's like, well, this is so interesting. If we use this as a source of inspiration and a starting point to then pick up the pencil and iterate on top of that, we've kind of energized our iteration stage and made that quicker, and we get to the final concept faster. But I don't think it takes away judgment, and good judgment is the thing that we always wanna own. AI is just really allowing the folks on my team to work at the top of their license, is how I think about it.

So how do we help people do more of that creative problem solving and thinking?

Awesome.

So great segue into our final question, which is around predictions. And anyone could go for it.

I feel like right now, there's still a barrier between AI and the... I think about it as similar. Like, Henry Jenkins is a psychologist. He talked about digital natives versus digital immigrants. So the way, like, I use the Internet versus my mom, not growing up with the Internet, was very different.

Versus our kids. Yeah. I think AI is gonna be similar. I think right now, you see people using AI like Google, in that we're asking it questions.

I think folks that grow up with AI are just gonna have different working patterns. Right? It's gonna be embedded within their flow. They're not gonna write a first draft.

They're gonna say, here are the things I'm aiming to do. Could you give me that draft? And now I'll craft that. So I think the more that you can make it native within your style, the closer it'll be.

I think that's also the hardest thing to adapt to, changing your behavior, but I do think there's gonna be a shift there.

So I think it's so overhyped right now.

I think it's amazing, but it's so overhyped. I think most of the promises we're hearing will not be fulfilled, but it's going to happen. I just think technology is moving so quickly, evolving so rapidly.

I can't even imagine what it's gonna be in five years, but I think what we're promising now is not gonna be delivered.

It'll look better.

It'll look better. I agree. I think it will look better, but I think this is a lot of hype.

I'll need another forty minutes to go into everything.

I think the trend that we're seeing now, as many of you know, is the AI agents. I hate using the word agentic. That's what it's called, agentic technology.

If you're not familiar, there are the current LLMs and AI tools like ChatGPT. They're more workflow related: inputs, outputs. Right? And then there's agentic, where you feed it a bunch of data and you give it a whole bunch of tools, APIs, and you just give it a task, and it figures out how to use that data and those tools to accomplish the goal. And so a lot of people are building things out in this way. It's starting to get traction because what you can do is actually have the AIs police each other and bring each other up. It will never replace expertise until we get to, like, quantum computing or something.

But at the same time, it's gonna get much better. It's going to replace a lot of the tasks that we do today, and it's going to affect all of our jobs, not in a bad way, in a good way, but it's gonna change how we work. Similar to the Industrial Revolution and all the other things in our current time: mobile phones, email, the Internet. Right? But my hope is that instead of just using this to scale out the amount of work that we do and how efficient we are, we take that newfound time, we do a little bit more, but we actually save a lot of that time to bring humanity back into everything that we do and reconnect people with each other, spend more time working as teams, partnering with each other, because technology has become, even pre-AI, such a big part of our lives and how we work, and everything's behind a computer. Let the computers offload some of that work, and let's bring people back.

All I can picture is, like, a conference room, a bunch of people sitting around, and the computer having a seat at the table as one part of that team, to balance it out. Well, thank you. Thank you to our amazing panelists.

That was great. I really appreciated hearing all of your thoughtful perspectives, and I hope all of us are taking some tips away. So now we're gonna shift gears into something a little bit different and very exciting.

So up next is a live recording of the Design Better podcast.

Hosts Eli Woolery and Aarron Walter are going to be joined by Shani Sandy, the VP of experience design at IBM.

And they're gonna have a conversation on how experience-led organizations are reducing risk and accelerating innovation, like we've just been talking about, through competent customer-centered design. So please join me in welcoming Eli, Aarron, and Shani to the stage. And once again, thank you to the amazing panel.