Why your ad campaigns need more than just an A/B Test

Up until my late 20s, I must’ve spent at least 75% of my time watching, playing, or just thinking about sports—and I wouldn’t be surprised if it was even more than that.

Nowadays, the percentage of my time devoted to sports is a lot closer to 5% because of my grown-up responsibilities, and with so little time on my hands, it’s hard to find time to even sit down and watch a game.

As a result, I’ve resorted to keeping track of my favorite teams by checking scores long after a game is over, which unfortunately doesn’t tell me the entire story of the result.

A narrow win in a low-scoring game can be interpreted a number of different ways.

A 7-6 football game that looks like a snoozefest on paper could’ve actually been the result of two dominant defensive teams. A 0-0 scoreline could’ve been the result of two goalkeepers standing on their heads to make incredible saves in a soccer match or hockey game. A 1-0 baseball game could’ve been the result of a pitcher shutting down his opposition in a perfect game.

Without knowing the context of a result, it’s difficult to determine why one competitor won, and why the other competitor lost. The same can also be said for your A/B test results.

Why A/B test results only tell part of the story

Yes, the first step in optimizing your ads is to run A/B tests. As an online marketer, it’s something you absolutely have to do to make sure you’re getting the best ROI out of your ads.

To determine the winner of an A/B test, most marketers rely on the click and conversion numbers in their ads dashboard, then combine them with data from their analytics tools. After crunching the numbers and making sure your results have at least a 95% confidence level, you’re ready to crown a winner and replicate your success with the next test batch…or are you?

While relying on analytics may be effective in telling you which ad performed best, it’s not the most accurate way to determine why one ad won and the other one didn’t.
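If you’re curious what that 95% confidence check looks like under the hood, here is a minimal sketch using a two-proportion z-test, which is one common way to compare two conversion rates. Your ads dashboard or analytics tool may use a different method, and the function names and sample numbers below are made up purely for illustration.

```python
import math


def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Return (z, two-sided p-value) for the difference in conversion rates.

    conv_a / conv_b are conversion counts; n_a / n_b are click counts.
    """
    rate_a = conv_a / n_a
    rate_b = conv_b / n_b
    # Pooled rate under the assumption that both ads convert equally well.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if std_err == 0:
        raise ValueError("Need at least one conversion and one non-conversion.")
    z = (rate_b - rate_a) / std_err
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


def is_significant(conv_a, n_a, conv_b, n_b, confidence=0.95):
    """True if the difference clears the chosen confidence level."""
    _, p_value = two_proportion_z_test(conv_a, n_a, conv_b, n_b)
    return p_value < 1 - confidence


# Hypothetical numbers: ad A converts 120 of 2,400 clicks, ad B 156 of 2,400.
z, p = two_proportion_z_test(120, 2400, 156, 2400)
print(f"z = {z:.2f}, p = {p:.3f}, significant: {is_significant(120, 2400, 156, 2400)}")
```

Notice what the output gives you: a verdict on which ad converted better, and nothing about the reason it did.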

Why did someone click an ad?

Was it your compelling copy, or was it the brightly colored call-to-action?

Why did someone decide to submit their information?

Was it because of the placement of the form on your landing page, or was your offer just too good to resist? Was it a combination of these two things, or was it something different altogether?

With traditional analytics, it’s difficult to answer these questions, and that’s what makes user testing such a powerful tool to online marketers like you.

By watching and listening to the way your study participants interact with your ads, you’re taking all of the guesswork out of your analytics. Instead, you’ll have the cold hard facts you need to replicate the success of your A/B test winners and truly optimize your ads.

So where do you start? The same place that most of your users do—your ads.

1. Test why people are clicking your search ads

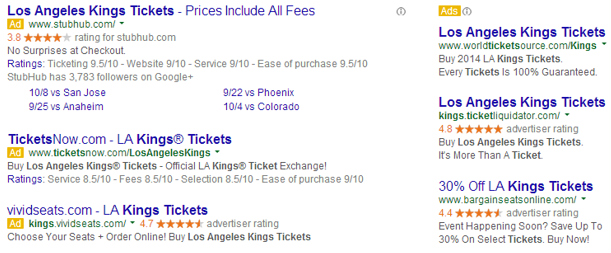

One of the first things to consider when user testing a search ad is its position. If you’re using a platform like AdWords, you know that your ads in the top positions will look very different from ads in lower positions.

AdWords search ads in positions 1-3 (left) look much different from ads in positions 4-6 (right).

If you were to set up a test between an ad that has an average position of 2.1 vs. an ad that has an average position of 2.9, you might end up getting invalid results. In this case, you’ll want to separately test your ads based on what they’ll look like in one of the top positions, as well as what they’ll look like in a lower position.

Once you’ve decided how your ads will look for your test, you’re ready to get into the details of why someone clicked your search ad.

If you’re following more traditional methods of A/B testing, you’re making incremental changes to your ads to isolate and test specific elements. The same approach should be taken when setting up your study.

Before your participants begin their test, tell them that their mindset should be that of someone who searched for a specific keyword (your best performing keyword for the test group). This step is important since you’ll also be user testing the relevance of your ads.

Once you’ve given your participant the test context, ask them about the content of your competing ads. It’s here you’ll find out things like whether or not you should still be using title caps in your headline, how important the relevance of your copy is, and so much more.

With the results of your study, you’ll be able to adjust your ads accordingly and improve your click-through rate. Since higher CTRs positively affect your quality score, you’ll also end up saving money and improving your ROI, and who wouldn’t want that?

2. Test why people are clicking specific display ads

While it may seem different on the surface, user testing your display ads is a lot like user testing your search ads—except, you know, the part about your participants having to search.

Instead, present participants with your display ads using an image host like Dropbox and make it really simple. Ask them to choose between two ads side-by-side and have them explain why they prefer one over the other.

We recently ran a test with these two ad variations, which encouraged folks to sign up for a free conversion rate optimization eBook:

After a few weeks’ worth of data, we found that the green banner was converting better than the blue banner, so naturally, we ran some user tests on it to find out why.

Our study results revealed that people clicked the green banner because of the brighter color, which we might have been able to infer with our A/B test results. But what our analytics couldn’t tell us was that participants liked seeing the word “free” in the CTA of the blue banner (though it wasn’t enough to convince them to click).

Based on our study, we now know that the next batch of display ads should probably have a bright background AND the word “free” in the CTA, and this is something that would’ve taken a lot of guesswork to deduce from our analytics data alone.

3. Test your ad flow

While landing pages are part of the ad click-through experience, focus specifically on what happens between the time your users click an ad to the time they hit your landing page (if you’re looking for landing page optimization tips, this entry can help you get started).

When running a study on your ads, be sure to ask your participants what they expect to see once they’ve clicked on your ad. Then, once they’ve hit the landing page, ask them if it met expectations and have them explain why.

First impressions are important, and it’s hard enough to gain customer trust as it is. If your ad’s message doesn’t match your landing page, you’re giving potential customers a poor user experience that they’re not likely to forget.

For example, if your ads mention that you’re selling $69 computers, but the cheapest computer on your landing page isn’t anywhere near $69, you’re probably wasting clicks and hurting your credibility with prospective customers.

By asking your participants what they’re expecting to see once they’ve clicked on an ad, you’ll have a much better idea of how to optimize your landing page, and you’ll have the evidence to back up any of the changes you’d like to make.

Always build in time to test your results

Like most online marketers, you’re probably moving at a million-miles-a-minute from project to project and A/B test to A/B test. If user testing isn’t already part of your process, it might be difficult to break the routine and add another step to your cycle.

Try not to think of it as an additional step, but as a supplement to analyzing your test results. Running a study on your A/B test results shouldn’t be done in lieu of your analytics data, and vice versa. Each set of data is meant to complement the other, so treat them like two interlocking pieces of a puzzle that help you see the bigger picture of your A/B test results.