Pre-launch testing vs. A/B testing: why waiting wastes time

What if your team could identify and dismantle the hidden barriers to audience engagement long before your ads go live, and before underperformance starts eating into your budget?

For too long, the emphasis on A/B testing has left a diagnostic gap in many teams' processes. It often forces teams into a reactive corner, leaving them no choice but to rely on guesswork and gut feeling after budgets are spent and opportunities are irrevocably lost.

Imagine, however, a simple shift: one where you can anticipate campaign success, not merely react to its absence.

To understand how to pivot to a more proactive stance, let’s first break down the pitfalls of over-reliance on A/B testing.

The limitations of traditional A/B testing

Teams trapped in the traditional launch cycle (generate creatives, run split tests, then wait for the results before drawing conclusions) miss the crucial why behind each metric.

This is the invisible wall: the gap between what your ad says and what your audience hears or feels. Traditional A/B testing excels at telling you which variant won, but it often fails to explain why one ad resonated (or didn't) with real people.

Beyond that gap, the way your team sets up its A/B testing parameters can also produce false or biased results.

Here are three ways that can happen.

1. You run too many hypothesis-based tests

Running too many A/B tests on closely related questions, such as variations of a creative element or multiple price points, is a problem.

This follows from how A/B testing is designed: each test accepts a small, built-in chance of a false positive (a 5% significance level is a common convention), so the more tests you run, the higher the odds that at least one of your "winners" is a false positive.
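To make the "more tests, more false positives" point concrete, here is a minimal Python sketch. It assumes the tests are independent, that none of the tested changes actually makes a difference, and that every test uses the same significance level (alpha = 0.05). Under those assumptions, the chance of at least one false positive across k tests is 1 - (1 - alpha)^k. The sketch also shows the Bonferroni correction (dividing alpha by the number of tests), one standard way to keep that overall rate in check; the function names are illustrative and not taken from any testing tool.

```python
# Assumptions (illustrative): tests are independent, no variant truly differs
# from its control, and every test uses the same significance level alpha.


def family_wise_error_rate(alpha: float, num_tests: int) -> float:
    """Chance of at least one false positive across num_tests independent tests."""
    return 1 - (1 - alpha) ** num_tests


def bonferroni_alpha(alpha: float, num_tests: int) -> float:
    """Per-test significance level that keeps the overall false-positive rate at or below alpha."""
    return alpha / num_tests


if __name__ == "__main__":
    alpha = 0.05
    for k in (1, 5, 10, 20):
        print(
            f"{k:>2} tests: P(at least one false positive) = "
            f"{family_wise_error_rate(alpha, k):.1%}, "
            f"Bonferroni per-test alpha = {bonferroni_alpha(alpha, k):.4f}"
        )
```

Under these assumptions, running 20 tests at alpha = 0.05 gives roughly a 64% chance that at least one result looks like a win purely by chance. Bonferroni is deliberately conservative; other corrections, such as Holm or Benjamini-Hochberg, make different trade-offs between false positives and missed real effects.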

2. Elements get tested in isolation

Focusing too much on individual A/B tests can lead to optimizing only isolated parts of the customer journey, like a single ad or landing page.

This might improve one metric, but it can ignore how that change affects the overall user experience or long-term brand perception.

For example, an ad might perform well in one test, but if you don't understand the specific context of that audience or platform, applying those "learnings" broadly could fail.

What works on a highly visual social media platform might not translate to a search ad, and A/B testing alone doesn't account for these contextual nuances.

3. It may not account for actual user intent

An ad might get a lot of clicks, but A/B testing won't tell you if users clicked because they genuinely understood and were interested in your offer, or if they were misled by the ad and quickly bounced.

This means you could be optimizing for clicks that don't translate into valuable conversions.

Why pre-launch tests actually save time

Pre-launch testing with human insights is the process of getting qualitative feedback directly from your target audience on your creative concepts, messages, and ad components before your campaign goes live.

It's about understanding the "why" behind potential audience reactions, emotional responses, and comprehension, allowing you to refine your ads based on real human understanding rather than just assumptions.

In an Insights Unlocked episode, Kristy Morrison, a Senior Manager for Digital Intelligence and Optimization at F5, shared her thoughts on the importance of this kind of rapid customer feedback.

“Every good experience that you create with your customer is part of your brand,” she said. “So is every bad experience.”

Pre-launch testing saves significant time compared to traditional A/B testing, primarily by preventing costly mistakes and making campaigns more effective from day one. Here's how:

- It’s a faster path to sustained high performance. Instead of waiting for live campaign data to tell you what's failing, you can ask users to articulate their immediate thoughts, feelings, and understanding as they view your ad, or even have them try to complete a task related to your ad's goal. This means less time spent iterating on live ads and a quicker path to achieving your desired performance metrics.

- Your ad or campaign budget is spent more efficiently. When you have a clear understanding of what resonates with your audience before launch, there's less risk of pouring budget into campaigns that are fundamentally flawed, which frees up resources for efforts that are already validated and poised for success.

- It reduces internal bias and false positives. By having real people articulate why they would or wouldn't click, what they found confusing, or how the ad made them feel, you get direct answers. This specific feedback reduces the need for trial-and-error testing in the live environment, leading to more informed decisions and fewer unproductive testing cycles.

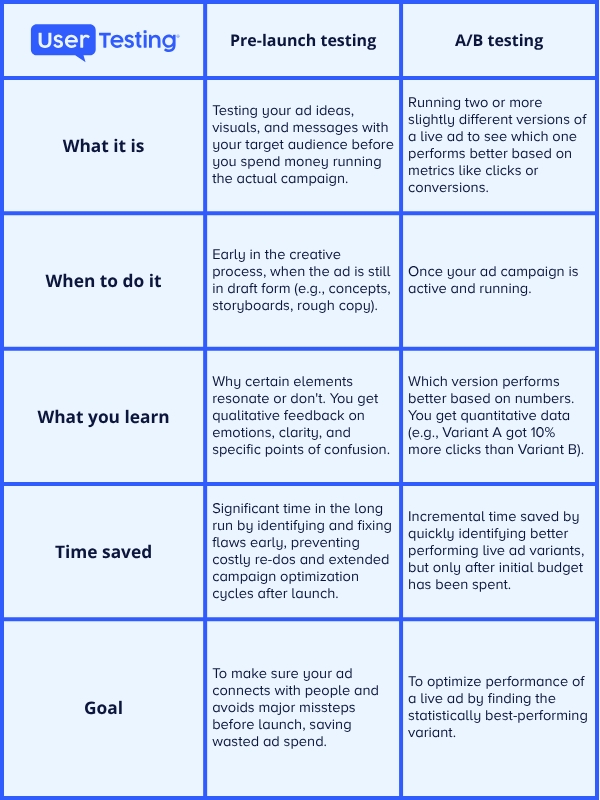

How pre-launch human insight testing differs from A/B testing

A/B testing isn't obsolete; it's just often misused. When deployed correctly, it absolutely still contributes to a campaign's overall performance.

On the other hand, pre-launch testing is your campaign's foundation. This is where you do the essential groundwork—bringing in your real audience to tell you, in their own words, if your core message is clear, if your visuals resonate, or if there's anything fundamentally confusing about your ad before it goes out to the world.

Here’s a fair breakdown to help you analyze both strategies more effectively.

How real-world teams can launch campaigns for success

Athletic Greens, the makers of AG1, understood the power of direct audience insight when rebranding their product and redesigning their website.

They used UserTesting, a human insight platform, to get video-based feedback from contributors on their new packaging options, website copy, and even how best to describe the product's flavor.

For example, they learned that explaining the per-portion price resonated more than just showing the monthly cost, and that shifting marketing imagery to include more "normal people" was highly effective.

These insights helped them better communicate AG1's health benefits and value, ultimately leading to a 5% increase in online checkout conversion. By testing their brand perception, customer education, and content strategy with real people, Athletic Greens launched their refined messaging with certainty, driving tangible business results.

Shaping proactive campaigns from the ground up

The era of simply reacting to A/B testing results is over. To truly succeed, marketing leaders must embed real audience insights directly into their creative development process.

Ultimately, your ad budget is too valuable, and your time too scarce, to launch campaigns based on assumptions.

By integrating real audience insights early in your creative process, you’re not just reducing risk; you're building a foundation of certainty for every campaign.

UserTesting helps advertising and marketing teams test campaign assets before launch, so they can uncover blind spots, refine creative, and validate messaging with real audience feedback.

Whether it’s a display ad, landing page, or video concept, teams can make confident, insight-driven decisions that reduce waste and improve ROAS.

Key takeaways

- Traditional A/B testing has its limitations. It often reveals what didn't work only after the budget is spent and the campaign is live.

- Faulty A/B test setups yield biased data. Improperly configured A/B testing parameters can lead to false positives and unreliable results, skewing your optimization efforts.

- Proactive pre-launch testing uncovers the "why" early. By getting direct human feedback on your campaigns before launch, you learn how people respond emotionally and whether your message is clear.

- Integrating human insights saves time and spend. Testing drafts like visuals and messages upfront identifies flaws when they're easier to fix, avoiding costly rework and wasted ad budget later.

- A/B testing fits into your strategy once the foundation is built. It can deliver incremental gains after strong creative concepts and messaging frameworks are in place.
