11 tips for effectively screening test participants

When you conduct user research, you need to screen participants effectively. After all, the right participants can give you valid feedback that helps you make meaningful improvements to your design.

That's why you should screen participants for all types of qualitative and quantitative research, including moderated and unmoderated studies and contextual projects.

How can you effectively screen your test participants so you get the most actionable insights?

Most researchers use a screener. A screener consists of a series of questions, usually no more than five, designed to help you assess whether a user is the right participant for your test. When using a screener, it's important to ask the questions in the correct sequence.

Need an example of what this looks like? Imagine you work at a pet food company. To get feedback on a new product that’s made for small and medium-sized dogs, you would want to survey only owners of small and medium-sized dogs. Screening out owners of large or extra-large dogs gives you the insights you are looking for and makes your research more credible.

Types of screener questions

Here are the general types of questions you may want to ask as part of a screener:

- Demographics: age, gender, ethnicity, location, and household income.

- Firmographics: job role, company size, employment status, and industry.

- Experience level with specific software and tools: An example question is: Do you have experience with any of these tools? Sketch, InVision, Figma, Framer.

- Frequency of use: An example question is: How often do you clean your clothes?

- Tech Savviness: An example question is: What is your web expertise? Beginner, Average or Advanced.

- Device Types: What type of computer, tablet or phone do you use?

- Brand Usage: Which of these brands do you use?

Remember to disqualify participants who aren't a fit as early as possible. This makes the process more efficient and is more respectful of a prospective participant's time.
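As a rough illustration of disqualifying early, here is a minimal Python sketch built on the pet food example. The `Question` class, the `screen` function, and the question wording are all hypothetical names made up for this sketch, not part of any survey tool. The point is only that screening stops at the first answer that does not qualify, so later questions are never asked.

```python
from collections.abc import Callable
from dataclasses import dataclass


@dataclass
class Question:
    text: str
    qualifying: set[str]  # answers that keep the participant in the study


def screen(questions: list[Question],
           get_answer: Callable[[Question], str]) -> tuple[bool, int]:
    """Return (qualified, questions_asked), stopping at the first miss."""
    for asked, question in enumerate(questions, start=1):
        if get_answer(question) not in question.qualifying:
            return False, asked  # disqualify early; skip remaining questions
    return True, len(questions)


# Hypothetical screener for the pet food example.
SCREENER = [
    Question("What kind of pet do you own?", {"Dog"}),
    Question("What size is your dog?", {"Small", "Medium"}),
]

# The cat owner is screened out after one question,
# so the second question is never asked.
cat_owner = {"What kind of pet do you own?": "Cat"}
print(screen(SCREENER, lambda q: cat_owner[q.text]))  # (False, 1)

large_dog_owner = {
    "What kind of pet do you own?": "Dog",
    "What size is your dog?": "Large",
}
print(screen(SCREENER, lambda q: large_dog_owner[q.text]))  # (False, 2)
```

Putting the broadest disqualifier first means most people who are not a fit answer only one question.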

11 tips for effectively screening participants

1) Keep the screener short and precise

Don't ask more than five questions, or participants may disengage and drop out. Asking too many questions, particularly when targeting niche profiles, may lead to "panelist fatigue," an industry term for the frustration participants experience while taking part in a project.

2) Incentivize

If the screener is expected to be complex or long, many researchers offer an incentive to participants who complete it but do not qualify for the main questionnaire. Health care studies, in particular, can have 10 to 15 screening questions. It is very frustrating for panelists to get screened out after spending five minutes going through the questions. A small incentive can build trust among panelists and encourage them to continue.

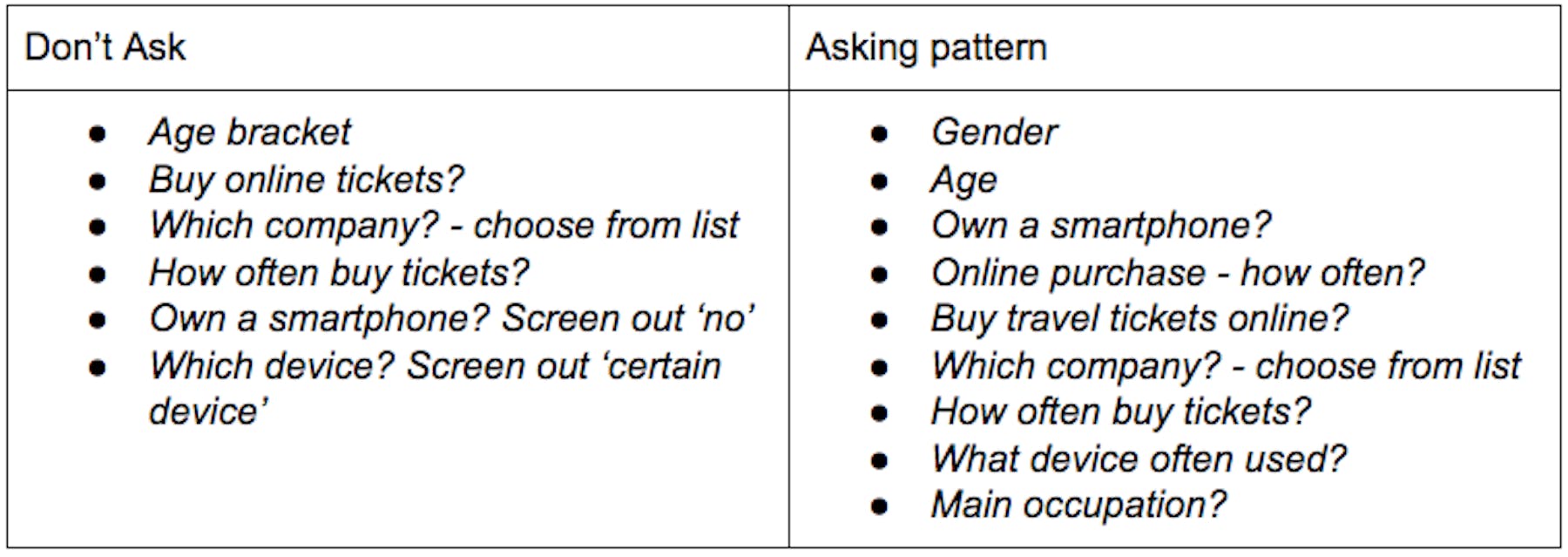

3) Start broad and get narrower

Funnel the questions so that they start with a wider topic and the follow-up questions become more specific.

Here’s a general sequence for surveys with more than five screener questions:

1. Start easy — Warm up questions

2. Most difficult questions — Take time and need thinking

3. End with general questions — Easy to answer and of broad application

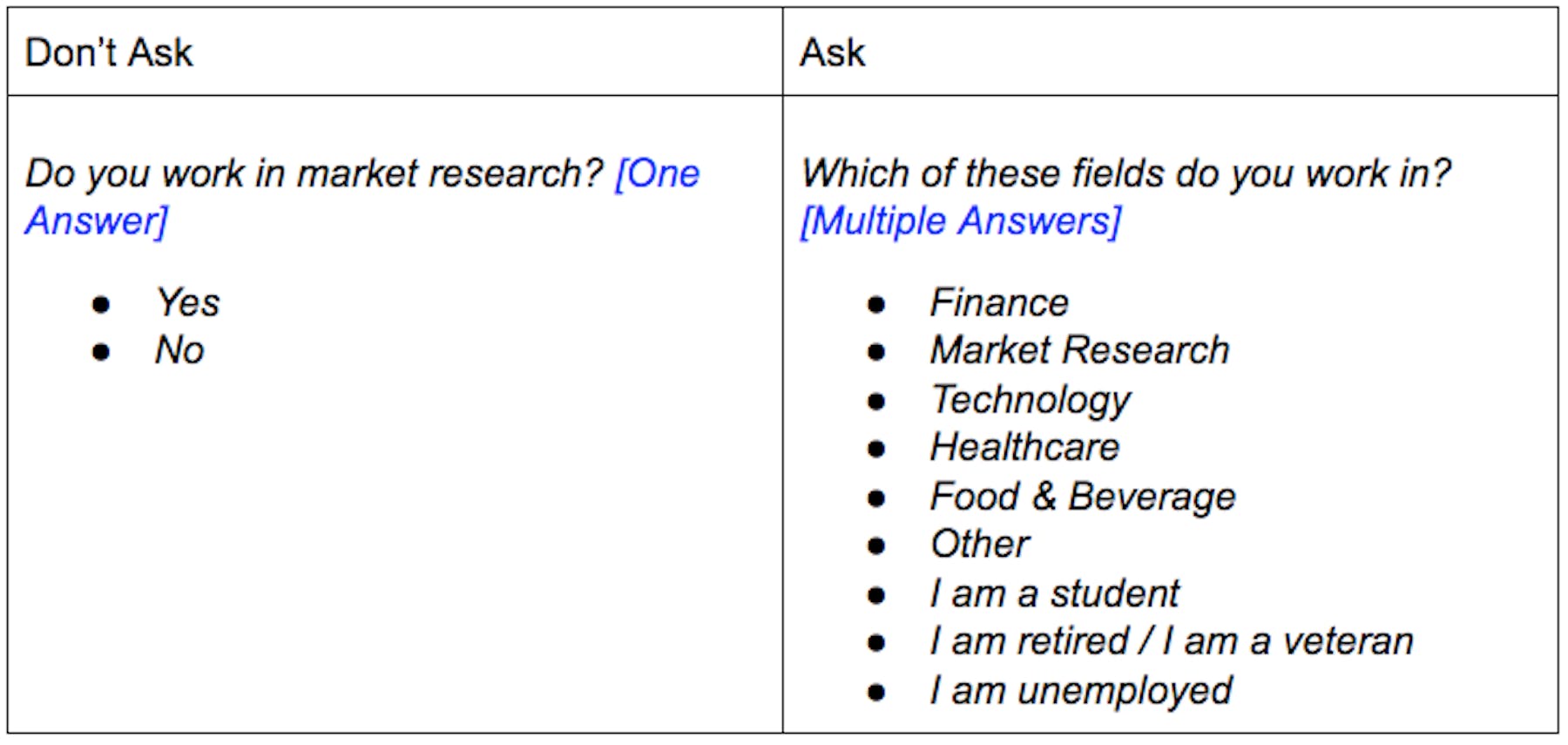

4) Stay away from the single-choice

Avoid "one answer" questions. Instead, use "multiple answer" questions. With a one-answer question such as yes/no, a participant who simply guesses has a 50% chance of qualifying, which decreases the credibility of the project. Some participants will lie just so they can qualify for the project.

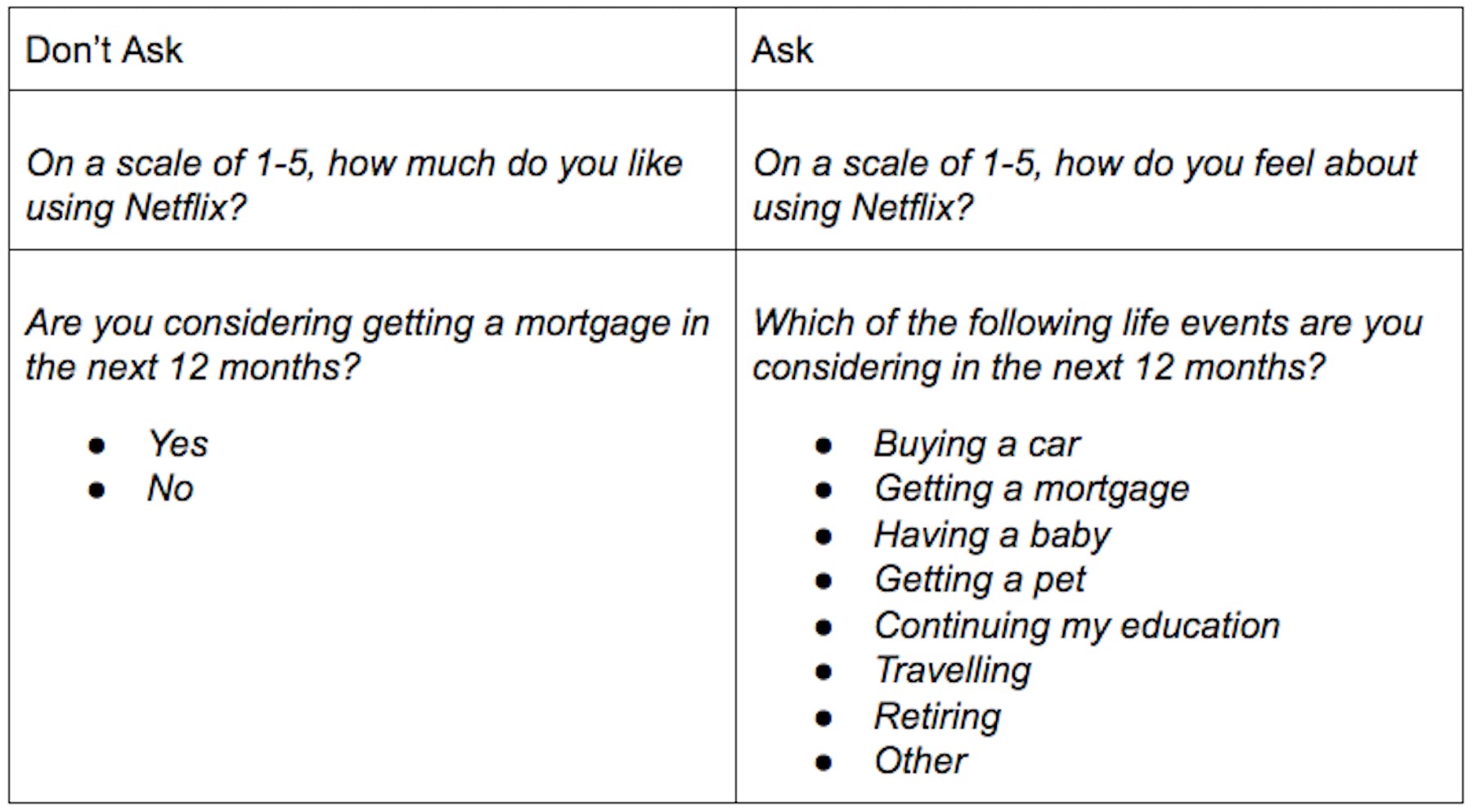
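To put rough numbers on the guessing problem, the sketch below compares the two question styles. It rests on simplifying assumptions that are ours, not the article's: the participant guesses at random, picks one option uniformly on a single-choice question, and ticks each option of a "select all that apply" question independently with probability 1/2. The function names are hypothetical.

```python
from fractions import Fraction


def single_choice_guess_rate(num_options: int, num_qualifying: int) -> Fraction:
    """Chance that one uniformly random pick lands on a qualifying option."""
    return Fraction(num_qualifying, num_options)


def multi_select_guess_rate(num_required: int, num_disqualifying: int) -> Fraction:
    """Chance of ticking every required option and no disqualifying option,
    assuming each option is ticked independently with probability 1/2."""
    return Fraction(1, 2 ** (num_required + num_disqualifying))


# Yes/no question with one qualifying answer.
print(single_choice_guess_rate(2, 1))  # 1/2

# Multi-select with one required option and two disqualifying decoys.
print(multi_select_guess_rate(1, 2))   # 1/8
```

Under these assumptions, adding decoy options to a multiple-answer question makes it much harder to qualify by guessing.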

5) Do not ask leading questions

Leading questions can influence an individual to provide a particular response. Answers chosen from a list are more reliable.

6) Use clear and concise language

Avoid sentences that could confuse participants. Avoid jargon, acronyms or abbreviations. Once you’ve sent out an online survey, you will not have an opportunity to clarify any question. Therefore, make sure that participants will understand the language used in the questionnaire.

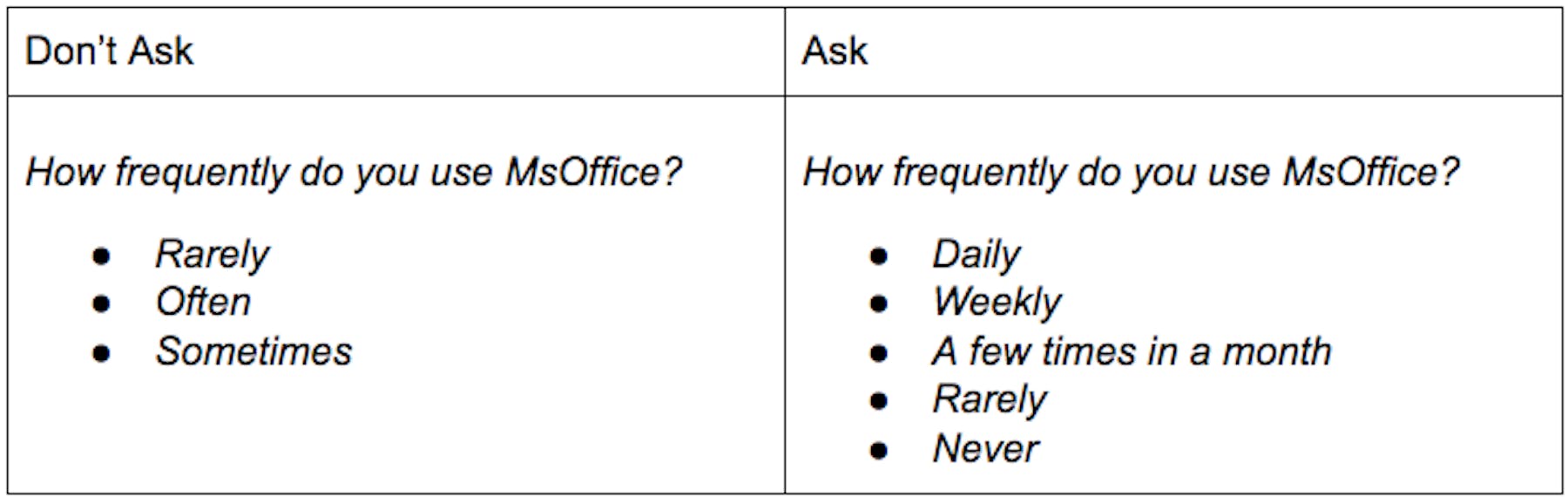

7) Be specific when defining frequency

Answer choices such as "often," "rarely," or "sometimes" are confusing and subjective. Some participants may define "often" as once a week, while others may define it as every day.

8) Use "Other"

Give participants the option to select "Other," "I don’t know," or "None of the above" when a list is not all-encompassing. This yields better data by preventing participants from selecting inaccurate answers just to proceed to the next question.

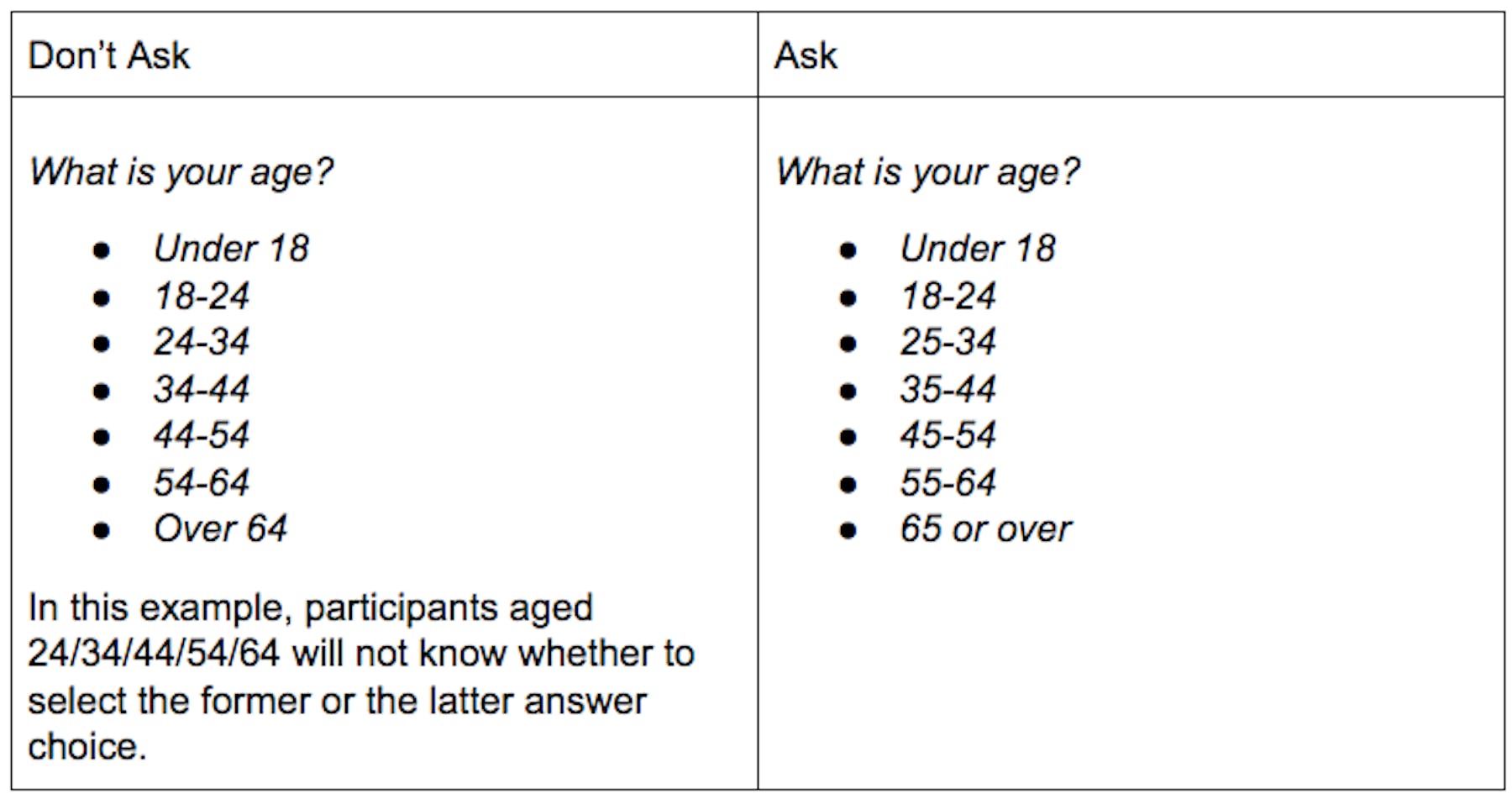

9) Provide clear answer choices

Avoid answers that overlap or cause confusion. A common example is overlapping age or income brackets in the answer choices.
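If your answer choices are numeric brackets, a quick check can catch overlaps before the screener goes out. The following is a minimal sketch, assuming each bracket is an inclusive `(low, high)` pair; `find_overlaps` is a hypothetical helper written for this example.

```python
Bracket = tuple[int, int]  # inclusive (low, high), e.g. ages 18 to 24


def find_overlaps(brackets: list[Bracket]) -> list[tuple[Bracket, Bracket]]:
    """Return neighbouring brackets (sorted by lower bound) that share a value."""
    ordered = sorted(brackets)
    return [(a, b) for a, b in zip(ordered, ordered[1:]) if b[0] <= a[1]]


# A 25-year-old fits two of these choices, and so does a 35-year-old.
overlapping = [(18, 25), (25, 35), (35, 45)]
print(find_overlaps(overlapping))
# [((18, 25), (25, 35)), ((25, 35), (35, 45))]

# Each value belongs to exactly one bracket here.
clean = [(18, 24), (25, 34), (35, 44)]
print(find_overlaps(clean))  # []
```

An empty result means no two brackets share a value. The same check works for income brackets expressed in whole currency units.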

10) Limit the number of open-ended questions

Asking too many open-ended questions leads to panelist fatigue and drop-outs. Participants tend to type gibberish when they are forced to answer too many open-ended or repetitive questions.

Additionally, the researcher is forced to read through hundreds of responses to manually determine who qualifies for a project and who does not.

11) Edit, test and revise

Finally, be sure to edit and revise your screener at least twice. Test your screener yourself by going through it and submitting real answers. Then, send it to colleagues and ask them to do the same. This process will help you understand what it feels like to answer the screener, so that you can cut anything extraneous.

There are many moving parts to consider when putting together a UX study to ensure it’s successful. Writing a screener is one of the first vital steps. It takes practice to make clear, efficient screeners, but it’s necessary so you can be sure your target users are represented in your studies.
